Three Aspects to See the Differences Between GPU and CPU Rendering (2)

Fox Renderfarm, a fast cloud rendering services provider and GPU & CPU render farm, continues to share with you the differences between GPU and CPU rendering.

2. The Magic Inside of CPU and GPU

The picture above comes from the NVIDIA CUDA documentation. Green represents computing units, orange-red represents storage units, and orange represents control units.

The GPU uses a large number of computing units and an extremely long pipeline, and it needs only very simple control logic with little cache. The CPU, by contrast, devotes much of its area to cache, complex control logic, and many optimization circuits. Compared with the GPU, computing units make up only a small part of the CPU.

That is what most GPU work looks like: a heavy computational workload that demands little sophistication but a great deal of repetition. Suppose you have to do arithmetic with numbers under 100 a hundred million times. Probably the best approach is to hire dozens of elementary school students and divide the task among them, since the work is repetitive rather than highly skilled. The CPU is like a prestigious professor who is proficient in advanced mathematics. His ability equals that of 20 elementary school students, and of course he is paid more.

But whom would you hire if you were Foxconn's recruiting officer? The GPU works like that: it gathers many simple computing units to complete a large number of computing tasks, on the premise that the tasks of student A and student B do not depend on each other. Many computation-heavy problems have this characteristic, such as password cracking, mining, and many graphics calculations. These calculations can be decomposed into many identical simple tasks, and each task can be assigned to one elementary school student. But some tasks involve steps that depend on one another. For example, on a blind date, further development requires both parties to be willing. There is no way to move on to marriage if either of you is against it. The CPU usually takes care of more complicated issues like this.
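To make the "divide the task among students" idea concrete, here is a minimal Python sketch of a brute-force search split into independent ranges. Everything in it is an illustrative assumption rather than something from the original article: the 4-digit PIN, the target value, and the names `search_range` and `crack` are hypothetical, and a thread pool merely stands in for GPU cores (Python threads will not give a real GPU-style speedup).

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor

# Hypothetical target: the SHA-256 hash of a 4-digit PIN we pretend not to know.
TARGET_HASH = hashlib.sha256(b"7291").hexdigest()

def search_range(start, stop):
    """One 'student': try every PIN in [start, stop) and return a match or None."""
    for candidate in range(start, stop):
        pin = f"{candidate:04d}"
        if hashlib.sha256(pin.encode()).hexdigest() == TARGET_HASH:
            return pin
    return None

def crack(num_workers=10, space=10_000):
    """Split the PIN space into independent chunks, one chunk per worker."""
    chunk = -(-space // num_workers)  # ceiling division so no PIN is skipped
    ranges = [(s, min(s + chunk, space)) for s in range(0, space, chunk)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        results = list(pool.map(lambda r: search_range(*r), ranges))
    # No chunk needs the result of another chunk, so the order of work is irrelevant.
    return next((pin for pin in results if pin is not None), None)

found = crack()
print(found)
```

The point of the sketch is only that each range can be searched without knowing anything about the others, which is the independence premise described above.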

Usually we use the GPU whenever the problem allows it. To continue the analogy, the GPU's computing speed depends on how many students are employed, while the CPU's speed depends on the quality of the professor. The professor's ability to handle complex tasks crushes the students', but for simpler tasks with a heavy workload, one professor still cannot compete with dozens of elementary school students. Of course, current GPUs can also do some complicated work, roughly at the level of junior high school students. But the CPU is still the brain that controls the GPU and assigns it tasks along with partially processed data.

3. Parallel Computing

First of all, let's talk about the concept of parallel computing: a kind of computation in which many calculations or execution processes are performed simultaneously. Such a computation can usually be divided into smaller tasks, which are then solved at the same time. A GPU works as a co-processor alongside the CPU, or host. It has its own memory and can even run 1000 threads at the same time.

When using the GPU to perform computing, the CPU interacts mainly with the following:

- Data exchange between CPU and GPU
- Data exchange on the GPU

In general, one CPU or GPU computing core (usually just called a “core”) can perform only one task at a time. With Hyper-Threading technology, one core may perform multiple computing tasks at the same time. (For example, in a dual-core, four-thread CPU, each core may perform two computing tasks at the same time without interruption.) What Hyper-Threading usually does is double the number of logical cores that each physical core presents. We often see a CPU running dozens of programs at the same time. In fact, from a microscopic point of view, these dozens of programs are still executed serially. On a four-core, four-thread CPU, for example, only four operations can be performed at a time, and the dozens of programs can only take turns on the four cores. However, because switching is so fast, at the macroscopic level the programs appear to be running “simultaneously”.
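The fast switching described above can be imitated with a toy round-robin scheduler. This is a simplified sketch, not how a real operating system schedules work: the names `program` and `run_time_sliced` are hypothetical, and each "program" is just a Python generator that yields once per unit of work on a single simulated core.

```python
from collections import deque

def program(name, steps):
    """A toy 'program': yields a label once per unit of work."""
    for i in range(1, steps + 1):
        yield f"{name}:{i}"

def run_time_sliced(programs):
    """Single simulated core: run one step of a program, then switch to the next."""
    queue = deque(programs)
    trace = []
    while queue:
        current = queue.popleft()
        try:
            trace.append(next(current))  # execute exactly one step
            queue.append(current)        # not finished: back of the queue
        except StopIteration:
            pass                         # finished: drop it from the queue
    return trace

trace = run_time_sliced([program("A", 2), program("B", 3), program("C", 1)])
print(trace)  # ['A:1', 'B:1', 'C:1', 'A:2', 'B:2', 'B:3']
```

The trace shows the steps of A, B, and C interleaved even though only one step ever runs at a time, which is why the programs look simultaneous from the outside.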

The most prominent feature of the GPU is its large number of computing cores. A CPU usually has only four or eight cores, and the count generally does not exceed two digits. A GPU for scientific computing may have more than a thousand cores. Thanks to this huge advantage in core count, the GPU can perform far more computations at once than the CPU. For calculations that can be performed in parallel, using the GPU can therefore greatly improve efficiency.

Here is a brief explanation of serial and parallel computation. In serial computing, the calculations are done one by one. In parallel computing, several calculations are done simultaneously. For example, to calculate the product of a real number a and a vector B = [1 2 3 4], the serial calculation first computes a × B[1], then a × B[2], then a × B[3], and finally a × B[4] to get the result a × B. The parallel calculation computes a × B[1], a × B[2], a × B[3], and a × B[4] at the same time to get a × B. If there is only one computing core, the four independent tasks cannot be executed in parallel and must be calculated serially, one by one. But if there are four computing cores, the four independent tasks can be divided so that each runs on its own core. That is the advantage of parallel computing.

Because the GPU has a large number of computing cores, the scale of its parallel computing can be very large, so it shows superior performance over the CPU on problems that can be solved in parallel. For example, when cracking a password, the task is decomposed into several pieces that can be executed independently. Each piece is allocated to one GPU core, and multiple pieces can be processed at the same time, thereby speeding up the cracking process.
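The scalar-times-vector example can be written out in a few lines of Python. This is only a sketch: the value a = 3 and the function names are assumptions made for illustration, the list uses Python's 0-based indexing, and a four-worker thread pool stands in for four computing cores rather than for a real GPU.

```python
from concurrent.futures import ThreadPoolExecutor

a = 3              # assumed value for the real number a
B = [1, 2, 3, 4]   # the vector from the text

def scale_serial(a, vec):
    """Serial version: compute a * vec[0], then a * vec[1], and so on."""
    result = []
    for x in vec:
        result.append(a * x)
    return result

def scale_parallel(a, vec, num_cores=4):
    """Parallel version: each product is an independent task given to a worker."""
    with ThreadPoolExecutor(max_workers=num_cores) as pool:
        # pool.map returns the results in input order, whichever task finishes first.
        return list(pool.map(lambda x: a * x, vec))

print(scale_serial(a, B))    # [3, 6, 9, 12]
print(scale_parallel(a, B))  # [3, 6, 9, 12]
```

Both versions give the same answer. The difference is that the parallel version never needs one product in order to compute another, so the four multiplications may run at the same time.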

But parallel computing is not a panacea. It rests on a premise: a problem can be executed in parallel only if it can be decomposed into several independent tasks, and many problems cannot be decomposed this way. For example, if a problem has two steps, and the second step depends on the result of the first, then the two steps cannot be executed in parallel and must be executed in sequence. In fact, our everyday computing tasks often have complex dependencies that prevent parallel execution. This is the big disadvantage of the GPU.
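A small Python sketch of such a dependency is shown below. The choice of repeated SHA-256 hashing is an assumption made for illustration (it is a common example of work where each step needs the previous output), and the function names are hypothetical.

```python
import hashlib

def step_one(data: bytes) -> bytes:
    """First step: hash the input."""
    return hashlib.sha256(data).digest()

def step_two(first_result: bytes) -> str:
    """Second step: needs the output of step_one before it can start."""
    return hashlib.sha256(first_result).hexdigest()

def two_step(data: bytes) -> str:
    intermediate = step_one(data)   # must finish first
    return step_two(intermediate)   # cannot begin until 'intermediate' exists

def chained(data: bytes, rounds: int) -> str:
    """Longer chain: round k consumes the result of round k-1, so the rounds run in order."""
    value = data
    for _ in range(rounds):
        value = hashlib.sha256(value).digest()
    return value.hex()

print(two_step(b"example") == chained(b"example", 2))  # True
```

However many cores are available, the second hash has nothing to work on until the first one has finished, so extra cores do not shorten this chain.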

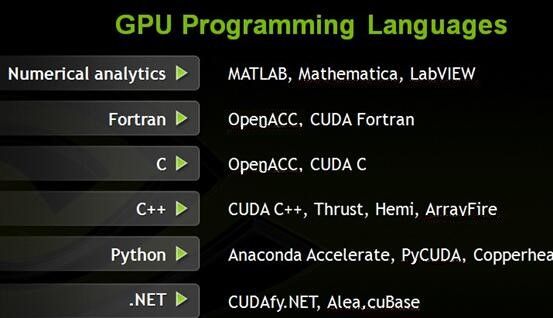

About GPU programming, there are mainly the following methods:

Fox Renderfarm hopes it will be of some help to you. It is well known that Fox Renderfarm is an excellent cloud rendering services provider in the CG world, so if you need to find a render farm, why not try Fox Renderfarm, which is offering a free $25 trial for new users? Thanks for reading!
