Fox Renderfarm Blog

Learn How to Make a Handheld Fan in 3D

3D Tutorial

Today, Fox Renderfarm, the industry's leading cloud rendering service provider and render farm, brings you a 3D tutorial that explains how to make a handheld fan. Let's get started.

First, import the reference image and use the straight line tool to draw the length of the handle. Then use the revolve tool to create the handle and cap it.

Generate a rectangle from its center point, adjust it to the appropriate size, and then generate a runway circle (a rounded, racetrack-shaped rectangle) from it. Use the fitting tool to get the appropriate shape.

Select the runway circle that was just generated, hold down Shift to extrude the faces on both sides and cap them, then use the shell tool to shell both sides.

Copy the inner edge line of the shell, extrude the face and cap it, delete the inner face to keep only the outer side, and then chamfer to generate the outer layer of the shell that needs to be hollowed out.

Use curves to draw the profile of the connecting shaft, use the revolve tool to generate the surface, and then cap it to generate the solid.

Draw the diagonal of the rectangle, use the diagonal to generate a round tube, and adjust the angle and thickness of the tube until they match the reference picture.

Draw a diagonal line again and use the Line Array tool to array the tube along this line, with the number of copies set to 18.

Use the object intersection line function, selecting the round tube and the shell to be hollowed out, to check whether the positions match. Adjust the position and then cut to get the hollowed-out object.

Use the Rectangle tool to generate a runway circle, adjust it to the right size, then cut and combine it with the hollowed-out object and offset it inward to get the solid. The same can be done for the outer runway circle. Make a backup copy of the hollowed-out object at this point.

Use the mirror tool to mirror the hollowed-out model made in the previous step to the back, then use the method from the fourth step (copying the inner edge line of the shell) to get an unhollowed shell. Generate a rounded rectangle and cut it according to the second reference picture, use the combination tool to combine the parts, and finally offset the surface to get the solid.

Use a rectangle to frame the size of the button, then use a straight line to connect the midpoints of the rectangle. Next, use the center point tool to generate a circle, extrude the circle to the right size, and adjust the height of the button.

Split the button from the handle and keep a spare copy, then chamfer the top of the handle for the next step.

For the base, use the revolve tool again. First draw the profile using curves, then revolve the shape and cap it to create a solid.

Now perform a Boolean split between the handle and the base, then detach the surface. Next, copy the edge line, move the inner circle downwards, use a two-rail sweep to generate the surface, and combine it to obtain the base shape.

Use the center point circle and rectangle tools to generate the button and indicator light shapes on the handle, extrude them into solids, and then perform a Boolean split with the handle to get the handle shape and the indicator light.

Use the Rectangle tool to create a runway circle and rotate it 45° to form the "x", then use the Trim tool to trim off the excess lines and combine the rest. After extruding the surface, use the Boolean split tool to split out the "x" icon.

Now create the circular texture on the button. First extract the structure line to get a button-sized circle, then generate a small round solid at the circle's node and use the Array Along Curve tool to array it. Arrange the five columns in sequence according to the image and mirror them to get the desired texture. Finally, use a Boolean split to get the button shape.

Chamfer the intersection of the button and the handle, and chamfer the intersection of the handle and the base.

Use curves to draw the fan blade shape, then use the XN tool to generate the surface and array it around the center point. The number of copies here is 5. Adjust the fan blade position and extrude the blade into a solid.

Check the model and chamfer it to complete the modeling.

The next step is to render the product. First, divide the product into four layers: one for the orange object, one for the flesh-colored object, one for the metal connection, and one for the self-illuminated part. Then start rendering.

First adjust the model position by aligning the model to the ground in the Advanced Options.

Assign the materials to the model parts in turn. Note that you need to turn down the metallic shine of the metal joints to get a frosted look.

Adjust the self-illuminating material on the handle to an intensity that suits the lighting, and choose white as its color.

In the image settings, set the options to Press Exposure, High Contrast, and Photography.

Change the background color in the environment settings, using the eyedropper tool to pick the color from the image. In the HDR editor, turn down the brightness of one light, aim it at the hollowed-out surface, and change its shape to a rectangle. Then place a main light on the left side of the product so that a shadow appears on the right side.

Adjust the object position in the camera view, lock the camera, and finish the rendering.

Source: YBW

How to Speed Up 3D Rendering?

3D Rendering

As the last step in completing a CG work, 3D rendering is time-consuming and demands patience, but it is very important. Many newcomers to the 3D industry may not understand why 3D scenes take so long to render, or what factors affect the speed of 3D rendering. In this article, Fox Renderfarm, the leading cloud rendering service provider and render farm in the CG industry, discusses how to improve the speed of 3D rendering.

3D rendering is slow because many components have to work together, such as your software, renderer, graphics card, and RAM, and a great many calculations are needed to form the final render. Rendering speed therefore depends mainly on three factors: your computer configuration, the software you use, and the scene or model itself.

How to Improve 3D Rendering Speed?

1. Upgrade Your Machines

Rendering relies heavily on the CPU performance and RAM of your computer. Low computer specs result in long rendering times. If you want to render faster, you need a more powerful processor and more RAM.

2. Upgrade Your Software

Most 3D software packages and their renderers are updated at regular intervals, and updates often bring a faster and better rendering experience. So if you want to speed up rendering, it is a good idea to update your software to the latest version. Note, however, that besides the rendering improvements, you should also take the other features and the stability of the new version into consideration.

3. Optimize Your Scenes and Models

Remove or hide models, objects, and faces that are not visible to the camera.

Adjust mapping: reduce the use of shadow mapping, remove displacement mapping, and use geometric and bump mapping whenever possible.

Reduce the number of lights and optimize the lighting parameters.

4. Use Cloud Rendering to Speed Up Rendering

The magic of cloud rendering lies in its ability to use many machines at the same time to render your scene, which can compress hours of rendering time into minutes.

If you have to maintain high rendering quality and that leads to long rendering times, cloud render farms are also recommended: they can handle batch rendering for you and render extremely fast in the cloud. Of course, there are many render farms on the market, and we recommend that you try them out. Many render farms offer a free trial, so you can start using them just by signing up.

Fox Renderfarm, the leading cloud rendering service provider and render farm in the CG industry, offers a $25 free trial for new users who sign up. Fox Renderfarm has helped render the work of countless clients worldwide, so if you are still plagued by the rendering times of your current 3D projects and want to speed things up quickly, try Fox Renderfarm!
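As a rough illustration of why spreading frames over many machines shortens the wait, the sketch below estimates wall-clock time for a batch render. It assumes every frame takes the same time and that frames are fully independent; the function name and the sample numbers are hypothetical and are not Fox Renderfarm figures.

```python
import math


def estimated_wall_minutes(frames: int, minutes_per_frame: float, machines: int) -> float:
    """Estimate wall-clock minutes when frames are split evenly across machines.

    Simplifying assumptions: identical machines, identical frame cost,
    and no upload, queueing, or download overhead.
    """
    if frames < 0 or minutes_per_frame < 0 or machines < 1:
        raise ValueError("frames and minutes_per_frame must be >= 0, machines >= 1")
    # The machine that receives the most frames determines the total time.
    frames_on_busiest_machine = math.ceil(frames / machines)
    return frames_on_busiest_machine * minutes_per_frame


if __name__ == "__main__":
    # Hypothetical job: 240 frames at 10 minutes each.
    for machines in (1, 10, 100):
        print(machines, "machine(s):", estimated_wall_minutes(240, 10.0, machines), "minutes")
```

In practice the gain is smaller than this idealized estimate, because transfer times and scenes that cannot be split per frame are not modeled here.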

Top 9 Free And Best 3D Modelling Websites in 2025

3D Rendering

Do you want to learn 3D modeling? Some 3D modeling software is very powerful but often requires you to pay for it. Blender is a free 3D program. But if you don't want to download a program and just want to model, get to know these 3D modeling websites that let you model online.

Fox Renderfarm, the best cloud rendering services provider and GPU & CPU render farm, shares with you the 9 best free 3D modeling sites.

1. SketchUp - Free 3D Modeling Website

SketchUp is powerful 3D modeling software for a wide range of drafting and design applications, including architecture and interior design. SketchUp Free is free web-based 3D modeling software capable of making anything you can imagine without having to download anything. SketchUp Free is more limited than paid versions like SketchUp Go, SketchUp Pro, and SketchUp Studio, but it is still a very good free 3D modeling website.

2. Tinkercad - Free 3D Model Site

Tinkercad is a free web application for 3D design, electronics, and coding from Autodesk. It is a very popular online 3D modeling website with a friendly interface, and it is very easy to use. It is also a popular platform for creating 3D printed models, as it can easily export STL files for 3D printing.

3. Vectary - Online 3D Modeling Site

Vectary is a free 3D modeling tool designed to help you create, share, and customize 3D designs. You don't have to download anything or write code; you can do 3D modeling directly in your browser. One feature of Vectary is that you can view your designs in AR. If you need a workspace, a paid version is available, but the basic version is always free.

4. Model Online With Figuro

Figuro is a free online 3D modeling tool for 3D artists, game developers, designers, and more. With Figuro you can create 3D models quickly and easily.

5. Free 3D Modeling Site - SelfCAD

SelfCAD is 3D modeling software that allows you to design, model, sculpt, sketch, render, and animate in 3D. It offers a free 3D modeling program for students and hobbyists. With SelfCAD you can also do 3D printing easily.

6. Third Design - 3D Modeling Website

Third Design is free online software for 3D modeling and 3D animation. You can use it to quickly create 3D models and 3D animations, and it provides free models to help you get started. If you want feedback on your 3D designs, you can share an unlisted link with others so they can leave meaningful comments. Third Design's basic version is free, and a more advanced paid version is available for professionals and businesses.

7. SculptGL - Free 3D Model Site

SculptGL is a free 3D modeling website and application focused on 3D sculpting. It allows you to create characters from basic shapes. Sculpting a character feels like sculpting clay, and this online 3D modeling software helps you bring your characters to life.

8. 3DSlash - Easy 3D Model Site

3D Slash is a 3D modeling program with a simple interface that is very easy to learn and use. It works in a way that feels like playing Minecraft: users create models with a set of tools by "cutting cubes". 3D Slash is web-based, and a desktop version is also available. The basic version is free.

9. Online 3D Modeling With Clara.io

Clara.io is full-featured, free online 3D modeling, animation, and rendering software that runs in your web browser. It supports rendering in the cloud using V-Ray, which allows you to generate realistic renderings.

Fox Renderfarm hopes you can find the best 3D modeling website to create your own 3D work! Fox Renderfarm is an excellent cloud rendering services provider in the CG world, so if you need a render farm for your 3D program, why not try Fox Renderfarm, which offers a free $25 trial for new users? Thanks for reading!

What is the difference between pre-rendering and real-time rendering?

3D Rendering

Pre-Rendering vs Real-time Rendering

"Avatar", directed by James Cameron, took four years and nearly 500 million US dollars to open up a new world of science fiction for everyone. The film's CGI characters, the Na'vi, look as lifelike as people in the real world, and the realistic sci-fi scenes are stunning. These wonderful images are inseparable from the CG artists and from pre-rendering technology.

To handle the rendering tasks of "Avatar", the Weta Digital supercomputer processed up to 1.4 million tasks per day, running 24 hours a day with 40,000 CPUs, 104TB of memory, and 10G network bandwidth. It took 1 month in total. Each frame of "Avatar" needed several hours to render, at 24 frames per second. Hence, a powerful rendering cluster is really important to a CG studio.

What is pre-rendering?

Pre-rendering is used to create realistic images and movies, where each frame can take hours or days to complete, or for the debugging of complex graphics code by programmers. Pre-rendering starts with modelling, using points, lines, surfaces, textures, materials, light and shadow, visual effects, and other elements to build realistic objects and scenes. Then computing resources are used to calculate the image of the model under factors such as viewpoint, light, and motion trajectory, according to the predefined scene settings. This process is called pre-rendering. After the rendering is completed, the frames are played back continuously to achieve the final effect.

It is mainly used in fields such as architectural visualization, film and television, animation, and commercials, with the focus on art and visual effects. To obtain ideal visual effects, modelers need to sculpt all kinds of model details during production; animators need to give the characters charm; lighting artists need to create various artistic atmospheres; and visual effects artists need to make the effects realistic.

Commonly used pre-rendering software includes 3ds Max, Maya, Blender, and Cinema 4D. These packages require you to arrange the scene in advance and set the relevant rendering parameters (such as shadows, particles, and anti-aliasing), and then render unattended on a PC or a render farm.

You can use a local machine or a cloud render farm for rendering. Fox Renderfarm provides rendering technical support for the software mentioned above.

Every frame in a pre-rendered scene is fixed in advance. Once rendering starts, each frame takes several seconds, minutes, or even hours to render. A large amount of memory, CPU/GPU, and storage resources is consumed during the process, which makes rendering a compute-intensive application. Film and television projects in particular usually have schedule requirements, so rendering tasks must be completed within a specified time. Today, such tasks are mostly submitted to cloud render farms. Cloud render farms, such as Fox Renderfarm, are professional service companies that provide massively parallel computing clusters.

After pre-rendering, the result is essentially the finished work. If you want the scene to be calculated and displayed in real time, as in an interactive online service or an online game, we have to talk about real-time rendering.

What is real-time rendering?

In August 2020, a live demonstration of the action role-playing game "Black Myth: Wukong", produced by Game Science Corporation from China, became popular on Chinese social networks. The top-notch visuals, rich details, immersive combat, and well-developed plot in the demonstration recreate an oriental magical world. Every beautiful scene in the game is rendered in real time.

Real-time rendering is used to render a scene interactively, as in 3D computer games, and generally each frame must be rendered within a few milliseconds. This means the computer calculates each image and displays it at the same time. Typical representatives are Unreal and Unity. The "Black Myth: Wukong" demonstration, for example, was built using Unreal Engine 4. The characteristic of real-time rendering is that the scene can be controlled in real time, which is very convenient for interaction. The disadvantage is that it is limited by the load capacity of the system. If necessary, it sacrifices the final quality, including models, light, shadow, and texture, to meet the requirements of the real-time system. Real-time rendering is currently applied to 3D games, 3D simulations, 3D product configurators, and more.

Real-time rendering focuses on interactivity and immediacy. Generally, scenes need to be optimized to speed up image calculation and reduce latency. For the user, every operation, such as a finger touch or a click on the screen, causes the image to be recalculated, and the feedback must arrive in real time. Low latency is therefore very important. In simulation applications, data shows that people do not obviously perceive a mismatch between video and audio only if the latency is kept within 100ms.

In recent years, as GPU performance has improved, real-time calculation has become faster and the calculated images more accurate. With the application of ray tracing and other technologies in particular, real-time rendering has become more realistic. These leading technologies are also clear trends in future production.
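The difference between the two approaches can be made concrete with a little arithmetic. The sketch below computes the time budget per frame for a real-time target and, for contrast, the total machine time of a pre-rendered shot. The function names and the sample values (a 10-second shot at 2 hours per frame) are hypothetical and chosen only for illustration.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Milliseconds available to render one frame at a given frame rate."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return 1000.0 / frames_per_second


def offline_machine_hours(duration_seconds: float, frames_per_second: float,
                          hours_per_frame: float) -> float:
    """Total machine-hours to pre-render a shot on a single machine."""
    return duration_seconds * frames_per_second * hours_per_frame


if __name__ == "__main__":
    # Real-time: the whole frame must fit inside this budget.
    for fps in (24, 30, 60):
        print(f"{fps} fps -> {frame_budget_ms(fps):.2f} ms per frame")
    # Pre-rendered: a hypothetical 10-second shot at 24 fps, 2 hours per frame.
    print(offline_machine_hours(10, 24, 2.0), "machine-hours")
```

At 60 frames per second the budget is under 17 ms per frame, which is why real-time engines trade some visual quality for speed, while a pre-rendered shot can spend hours on each frame.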
If you want to learn more about real-time rendering, please feel free to contact us.

What is CPU Rendering?

3D Rendering

With more and more CG-heavy movies such as "Alita", "The Lion King", and "Frozen 2", CG production has gradually become well known. As an integral part of production, CG rendering has also received more and more attention. 3D rendering is the last step of CG production (apart, of course, from post-production). It is also the stage that finally turns your 3D scene into images. At this point people discover that there are CPU rendering and GPU rendering. So what is CPU rendering? What does the CPU do during rendering?

The details of 3D rendering vary between rendering software, but the basic principle is the same: the CPU processes the parameters set in the model according to the calculation method defined by the rendering software. These include the angle from which the model is viewed, lighting, distance, hidden-surface removal/occlusion, alpha, filtering, and even texture mapping, so that the digital model is turned into a visible image, which the graphics card then displays.

3D rendering requires a lot of complicated calculations, so it needs a powerful processor. As a simple example, when a beam of light illuminates an apple, which way does the shadow fall, how big is it, and what does it look like? Answering these questions takes many complicated calculations. The CPU can solve such problems, but a high clock frequency alone is not enough: a CPU with more cores renders faster. So if you configure a computer for design work, invest as much as possible in the processor. CPU rendering is extremely sensitive to the number of cores, and multi-core processors bring huge performance improvements. If funds allow, the more cores the better. In addition, the demands on memory and hard disk response speed are also relatively high.

So, does 3D rendering rely on the CPU or the graphics card? It's actually very simple, and depends on what kind of rendering software is used:

1. Traditional CPU rendering software, such as V-Ray and Arnold. These renderers use the CPU, and almost all CPU rendering software supports multi-threading well, so the more cores, the higher the rendering efficiency. At the same frequency and cache size, doubling the number of cores almost doubles the rendering speed.

2. GPU renderers, such as Octane and Redshift, which greatly reduce the dependence on the CPU. In GPU rendering software, the graphics card determines the rendering efficiency.

For example, "Gravity", "Guardians of the Galaxy", and "Avengers" were produced using the CPU renderer Arnold. It can render very realistic, very high-quality, cinematic images with highly controllable results (which is important).

If you use CPU rendering software, do not hesitate: a multi-threaded, high-frequency, large-cache CPU is a strong guarantee of greatly improved efficiency. If you use GPU rendering software, then a good GPU is the right choice. But whether you use CPU or GPU rendering, a render farm is a good option. Fox Renderfarm, for example, provides a massive number of render nodes, allowing you to get a 1-month project rendered in as little as 5 minutes. Why not try it?
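The statement that doubling the cores almost doubles the rendering speed holds when nearly all of the work can run in parallel. One common way to reason about this is Amdahl's law, a general model that the article itself does not mention; the sketch below applies it under the assumption that a fixed fraction of the render is parallelizable. The function name and the sample fractions are illustrative only.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Theoretical speedup on `cores` cores when `parallel_fraction`
    of the work (between 0 and 1) can be spread across them."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Compare a fully parallel job with one that is 95% parallel.
    for cores in (4, 8, 16, 32):
        ideal = amdahl_speedup(1.0, cores)
        realistic = amdahl_speedup(0.95, cores)
        print(f"{cores:>2} cores: ideal x{ideal:.1f}, 95% parallel x{realistic:.1f}")
```

With a fully parallel workload the speedup equals the core count, while even a small serial portion (scene loading, for instance) makes each additional core worth a little less.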

How to Use KeyShot 9 to Render Christmas Scene - Tutorial

Keyshot

This article is a 3D rendering tutorial on a Christmas scene shared by 3D artist Drown, organized by Fox Renderfarm, the leading cloud render farm in the CG industry. Because there are so many items in the scene, this tutorial focuses on lighting the scene and on techniques for creating a Christmas atmosphere, and describes the material adjustments only briefly.

Open the prepared Christmas scene in KeyShot 9, add a camera, adjust it to a suitable angle, and save. Next, give the main subject, the crystal ball, a glass material, and switch the lighting preset to Product mode so that light can pass through the glass ball and illuminate the details inside.

The tablecloth in the scene and the curtains in the background use a red velvet material. The official velvet material of KeyShot 9 was chosen to bring out the atmosphere of the Christmas scene.

Next, we need to find a suitable HDR map. Because it is a Christmas scene, we need an indoor HDR map with many small bright light sources to simulate the atmosphere of decorative chandeliers on Christmas Eve. After loading the HDR map, rotate it to an appropriate angle and adjust the brightness, contrast, hue, saturation, and so on to give the scene a mix of warm and cold tones.

Enter the image panel, change the image style to Photography, and adjust the exposure, contrast, and so on to further enhance the warm-cold and light-dark contrast of the scene.

Next, open the HDR canvas and manually add small warm light sources to cover the cold incandescent lights in the original image, further strengthening the Christmas Eve atmosphere. Take care to keep some cold light in the scene so that it does not become too warm. At the same time, adjust the brightness of the small warm light sources appropriately and change the blending mode to Alpha so that they cover the cold incandescent lights in the original image.

To highlight the crystal ball, add a sphere to the scene and set its material to a spotlight. After adjusting its position, aim it at the crystal ball.

After further fine-tuning of the lighting parameters, the atmosphere of the picture is in place, but the picture is now a little too warm and lacks realism.

Add a cool rectangular light on the right side of the picture to balance the warm atmosphere, so that the result does not look fake. Here you must change the blending mode of the rectangular light to Blend, to prevent the glass ball from directly reflecting a pure white rectangle, which would destroy the realism of the picture.

Once the atmosphere is right, you can start adjusting the materials. Start with the snow in the crystal ball. Use the noise texture node to add bumps and color variation to the surface of the snow. If the viewport stutters, you can hide other items in the scene, including the spotlight.

Next, use the matching texture map to adjust the material of the lounge chair inside the crystal ball. Anything can be placed here, not necessarily a chair, as long as its material and color are suitable. A brighter color scheme is recommended to match the atmosphere of the scene.

Next, adjust the material of the Christmas tree.

After making the bulb material on the Christmas tree emit light, observe the current effect. Note that the glass of the crystal ball must be given a transparent glass material, otherwise the light cannot penetrate the glass and illuminate the scene inside.

Use the matching wood texture map to quickly set up the wooden base material, and adjust it to your liking, for example by using a black plastic base instead.

Then open the material of the glass ball and add the fingerprint texture that ships with KeyShot 9. After adjusting its position and size, turn off texture repeat and add the fingerprint texture as a diffuse texture. Then connect the fingerprint texture to a label and use the texture as the label's color. The brightness must be adjusted here, and it should be reduced as much as possible so that the fingerprints are only faintly visible.

Use scratch maps, adjust their contrast with a color adjustment, and use them to control the transparency effect on the glass. Repeat this step to create scratches and bumps on the glass. Keep the scratches subtle, otherwise the glass will look fake.

Next, adjust the texture on the crystal ball's name tag.

Use Photoshop to create a piece of text, making sure to use a black background, pure white letters, and a horizontal layout. After saving the black and white picture, use the 3D > Generate Normal Map option in the Photoshop filters to generate a bump texture.

Drag the texture onto the name tag, create a new material for the lettering, and adjust the color according to your preference. Then use the black and white image as the opacity map and the normal texture as the bump map. Pay attention to the normal texture to complete the adjustment of the name tag.

Quickly adjust the material of the board. Its color should be fairly dark so that the board does not stand out too much. Then use the fuzz node in the geometry settings to create fluff on the tablecloth. The surface material of the fuzz node can reuse the original red velvet material.

To prevent the fluff from passing through the models, you can render a simple top view and then paint a black and white texture in Photoshop according to the positions of the items. Use a Gaussian blur filter to soften the edges of the black and white texture, then use it as the length texture of the fuzz node. Where the texture is black, the fluff length is 0. After the geometry node is re-executed, the fluff no longer passes through the models.

The curtain can use the color gradient node. Change the gradient type to view direction to enhance the sense of volume in the curtain fabric. The background wall should be neither too dark nor too bright, otherwise the background becomes too eye-catching and destroys the atmosphere of the scene.

Use glass for the candle's wrapping paper and reduce its refractive index to get the look of transparent plastic wrap. Then use a translucent material for the candles; the colors can be chosen freely.

For the gift boxes, it is recommended to give each box its own color, and to use blue among the cool colors so that it echoes the cold light in the scene.

Next, adjust the surface of the gift boxes to add a bumpy texture. The effect does not need to be complicated; simpler is fine.

Next, add a sphere inside the crystal ball to create a snow effect. Finally, fine-tune the composition and lighting, and then render the image.

Conclusion

Now you know how to render a Christmas scene in KeyShot 9, don't you? Why not give it a try?

If you want your rendered scenes to become more detailed and complex, try the best CPU & GPU render farm, Fox Renderfarm, which provides a very good cloud rendering service and a free $25 trial!
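For readers curious about what the name tag step does when it turns a black and white image into a normal map, the following sketch shows one common approach: treat brightness as height and derive a surface normal from the height differences between neighboring pixels. This is a generic finite-difference method written for illustration, not Photoshop's or KeyShot's actual algorithm; the function name, the `strength` parameter, and the channel sign convention are assumptions.

```python
import math


def height_to_normal_map(height, strength=1.0):
    """Convert a 2D grid of heights (0.0 = black, 1.0 = white) into a grid
    of (r, g, b) normal-map pixels with channel values from 0 to 255."""
    rows, cols = len(height), len(height[0])
    normal_map = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Central differences; neighbors are clamped at the image border.
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, cols - 1)]
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, rows - 1)][x]
            dx = (right - left) * strength
            dy = (down - up) * strength
            # The normal tilts away from rising height. Sign conventions for
            # the green channel differ between tools; this choice is assumed.
            nx, ny, nz = -dx, -dy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Map each component from [-1, 1] to [0, 255].
            pixel = tuple(int(round((c / length * 0.5 + 0.5) * 255))
                          for c in (nx, ny, nz))
            row.append(pixel)
        normal_map.append(row)
    return normal_map


if __name__ == "__main__":
    # A tiny "image": black on the left, white on the right.
    step = [[0.0, 0.0, 1.0, 1.0]] * 3
    for line in height_to_normal_map(step):
        print(line)
```

Flat areas come out as the familiar light blue of normal maps, while the edges of the white lettering produce tilted normals, which is what makes the text appear raised when the map is used as a bump texture.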

Three Aspects to See the Differences Between GPU and CPU Rendering (2)

3D Rendering

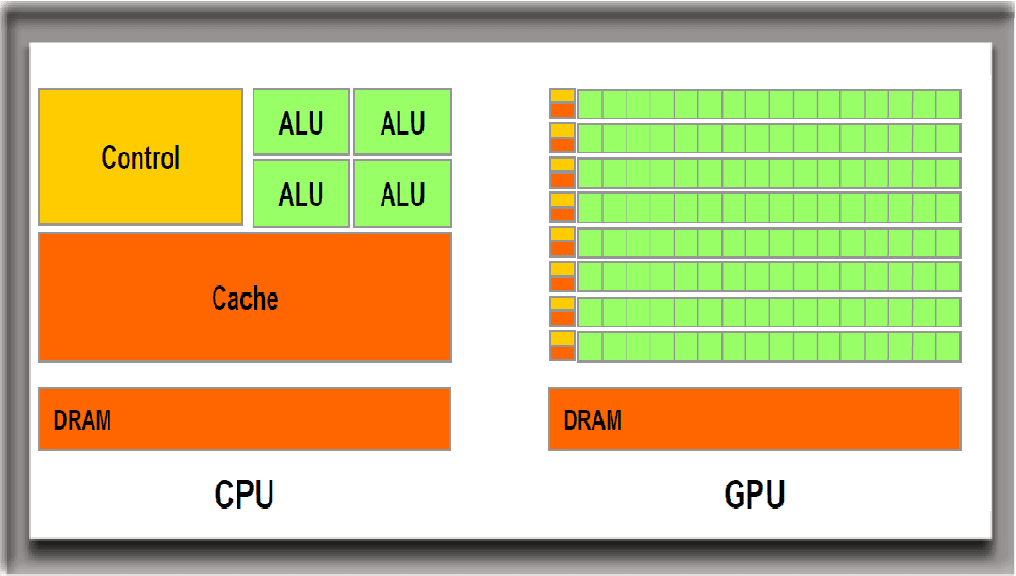

The fast cloud rendering services provider and GPU &x26; CPU render farm, Fox Renderfarm will still share with you the difference between GPU and CPU rendering.Three Aspects to See the Differences Between GPU and CPU Rendering (1)2. The Magic Inside of CPU and GPUThe above picture origins from the NVIDIA CUDA document. The green color represents computing units, orange-red color represents storage units, and the orange color represents control units.The GPU employs a large number of computing units and an extremely long pipeline, with only a very simple Cache-free control logic is required. But CPU is occupied by a large amount of Cache, with complex control logic functionality and lots of optimization circuits. Compare to GPU, CPU’s computing power is only a small part of it.And that’s most of the GPU's work look like, large work load of computation, less technique but more repetitive computing required. Just like when you have a task of doing the number calculation within 100 for a hundred million times, probably the best way is to hire dozens of elementary school students and divide the task, since the task only involve repetitive work instead of highly-skills requiring. But CPU is like a prestigious professor, who is proficient in high-level of math field, his ability equals to 20 elementary students, he gets higher paid, of course.But who would you hire if you are Fox Conn’s recruiting officer? GPU is like that, accumulates simple computing units to complete a large number of computing tasks, while based on the premise that the tasks of student A and student B is not dependent on each other. Many problems involving large numbers of computing have such characteristics, such as deciphering passwords, mining, and many graphics calculations. These calculations can be decomposed into multiple identical simple tasks. Each task can be assigned to an elementary school student. But there are still some tasks that involve the issue of "cloud." 
For example, when you go to a blind date, it takes both of the parties’ willingness to continue for further development. There's no way to go further like getting married when at least one of you are against this. And CPU usually takes care of more complicated issue like this.Usually we can use GPU to solve the issue if it’s possible. Use the previous analogy, the speed of the GPU's computing depends on the quantity of students are employed, while the CPU's speed of operation depends on the quality the professor is employed. Professor's ability to handle complex tasks can squash students, but for less complex tasks with more workload required, one professor still can not compete with dozens of elementary students. Of course, the current GPU can also do some complicated work, which is equivalent to junior high school students’ ability. But CPU is still the brain to control GPU and assign GPU tasks with halfway done data3. Parallel ComputingFirst of all, let's talk about the concept of parallel computing, which is a kind of calculation, and many of its calculation or execution processes are performed simultaneously. This type of calculation usually can divide into smaller tasks, which can then be solved at the same time. Parallel computing can co-process with CPU or the main engine, they have their own internal storage, and can even open 1000 threads at the same time.When using the GPU to perform computing, the CPU interacts mainly with the following:Data exchange between CPU and GPUData exchange on the GPU In general, only one task can be performed on one CPU or one GPU computing core (which we usually call “core”) at the same time. With Hyper-Thread technology, one computing core may perform multiple computing tasks at the same time. (For example, for a dual-core, four-thread CPU, each computing core may perform two computing tasks at the same time without interruption.) What Hyper-thread do is usually double computing core’s logical computing functionality. 
We often see a CPU running dozens of programs at the same time. In fact, from a microscopic point of view, these dozens of programs are still executed serially. On a four-core, four-thread CPU, for example, only four operations can be performed at a time, and the dozens of programs must share those four computing cores. However, because the switching between them is so fast, at the macroscopic level the programs appear to be running "simultaneously".

The most prominent feature of the GPU is its large number of computing cores. A CPU usually has only four or eight computing cores, and generally no more than a single-digit count. A GPU built for scientific computing may have more than a thousand. Thanks to this huge advantage in core count, the number of computations a GPU can perform at once far exceeds that of a CPU. For calculations that can be performed in parallel, using the GPU can therefore greatly improve efficiency.

Let me briefly explain serial and parallel computation of a task. In serial computing, the calculations are done one by one. In parallel computing, several calculations are done simultaneously. For example, to calculate the product of a real number a and a vector B=[1 2 3 4], the serial calculation first computes a*B[1], then a*B[2], then a*B[3], and finally a*B[4] to get the result a*B. The parallel calculation computes a*B[1], a*B[2], a*B[3], and a*B[4] at the same time to get a*B. If there is only one computing core, the four independent computing tasks cannot be executed in parallel and can only be calculated serially, one by one. But if there are four computing cores, the four independent tasks can be divided up and each executed on its own core. That is the advantage of parallel computing.
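The a*B example above can be sketched in Python. This is a minimal illustration and not GPU code: the function names `scale_serial` and `scale_parallel` are made up for this sketch, and the thread pool merely stands in for "four computing cores". In standard CPython, threads do not actually speed up arithmetic like this, so the point is only how the work is split into four independent tasks.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_serial(a, B):
    """Compute a*B one element at a time, in order."""
    result = []
    for b in B:
        result.append(a * b)
    return result


def scale_parallel(a, B, workers=4):
    """Hand each independent product a*b to a pool of workers.

    pool.map returns results in the same order as the input,
    so the output matches the serial version.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda b: a * b, B))


if __name__ == "__main__":
    B = [1, 2, 3, 4]
    print(scale_serial(3, B))
    print(scale_parallel(3, B))
```

Both functions return the same list; the only difference is whether the four products are requested one after another or handed out together. The split works because no product depends on any other.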
Because the GPU has a large number of computing cores, the scale of its parallel computing can be very large, and on problems that can be solved in parallel it shows performance superior to the CPU. For example, when cracking a password, the task is decomposed into several pieces that can be executed independently. Each piece is allocated to one GPU core, and many cracking tasks can be performed at the same time, thereby speeding up the process.

But parallel computing is not a panacea. It rests on a premise: a problem can be executed in parallel only if it can be decomposed into several independent tasks, and many problems cannot. For example, if a problem has two steps, and the second step depends on the result of the first, then the two steps cannot be executed in parallel and must be executed in sequence. In fact, our everyday computing tasks often have complex dependencies that cannot be parallelized. This is the big disadvantage of the GPU.

As for GPU programming, there are mainly the following methods:

Fox Renderfarm hopes it will be of some help to you. It is well known that Fox Renderfarm is an excellent cloud rendering services provider in the CG world, so if you need to find a render farm, why not try Fox Renderfarm, which is offering a free $25 trial for new users? Thanks for reading!

Linux’s Place in The Film Industry

3D Rendering

In 1991, a student named Linus Torvalds began developing a new operating system as a hobby. That hobby, which would later be called Linux, forever changed the world of computers. Since Linux is open source, anyone can license it for free and modify the source code to their liking. This has made Linux one of the most popular operating systems in the world.

Linux is everywhere. The web server maintaining this page is very likely Linux based. You may have a version of Linux in your pocket right now. Google's Android operating system is a modified version of Linux. Several world governments use Linux extensively for day-to-day operations. And many would be surprised to learn that Linux has become the standard for major FX studios.

In the early 90s, Hollywood studios relied on SGI and its Irix operating system to run animation and FX software. At the time, Irix was one of the best systems available for handling intense graphics. But a change was about to sweep through the computer industry. Windows began to dominate the business world, and Intel began making powerful chips at a lower price point. These market forces made expensive SGI systems hard to justify.

When studios began looking for a system to replace Irix, Windows wasn't an option due to its architecture. The proprietary software in place at many studios was written for Irix. Since Irix and Linux were both Unix based, porting that software to Linux was easier than porting to Windows. Render farms were the first to be converted. In 1996, Digital Domain was the first production studio to render a major motion picture on a Linux farm with Titanic. DreamWorks, ILM, Pixar and others quickly followed. Workstations were next for Linux once artists realized the performance boost in the new operating system. Under pressure from studios, commercial software vendors got on board and started releasing Linux compatible versions. Maya, Houdini, Softimage and other popular 3D applications quickly became available for Linux.

By the early 2000s, most major studios were dominated by Linux. While Windows and Mac environments are still used for television and small independent films, practically all blockbuster movies are now rendered on Linux farms.

Linux has many advantages for render farms. The obvious benefits are cost and customization. Since Linux is free to license, startup costs are greatly reduced compared to commercial systems. And since Linux is open source, completely customized versions of the operating system are possible.

There are other advantages. Linux machines can multitask well and are easy to network. But the single greatest advantage is stability. Unlike other operating systems, Linux doesn't slow down over time. It is common for Linux machines to run for months, yes months, without needing a reboot.

With all these advantages, it's surprising to learn many online render farms still haven't embraced Linux. While a handful of farms like Rebus, Rendersolve and Rayvision support Linux, Windows is still the most common environment for cloud rendering services.

It's not likely anything will replace Linux's role in the film industry soon. Studios are heavily invested in Linux with millions of lines of custom code. While anything is possible, it would take another industry change akin to the PC revolution to shake Linux from its place in Hollywood.

The story of Linux is almost like a Hollywood movie itself. It shows us that anything is possible. It's hard to believe that a simple student project forever changed the world of computers and became the backbone of the film industry.

About: The author, Shaun Swanson, has fifteen years of experience in 3D rendering and graphic design. He has used several software packages and has a very broad knowledge of digital art ranging from entertainment to product design.

If you want to know more about 3D rendering, follow us on Facebook and LinkedIn.

The Future of 3D Rendering is in the Cloud

3D Rendering

When we think of 3D animation, we imagine an artist sitting at a workstation plugging away in software like 3DS Max or Cinema 4D. We think of pushing polygons around, adjusting UVs and key frames. We imagine the beautifully rendered final output. What we don't often think about is the hardware it takes to render our art.

Illustrators may get by rendering stills on their own workstation. But rendering frames for animation requires multiple computers to get the job done in a timely manner. Traditionally, this meant companies would build and manage their own render farms. But having the power to render animations on-site comes with a considerable price. The more computers that have to be taken care of, the less time there is to spend on animation and other artistic tasks. If a render farm is large enough, it will require hiring dedicated IT personnel.

Well-funded studios might buy brand-new machines to serve as render slaves. But for smaller studios and freelancers, render farms are often built from machines too old to serve as workstations anymore. Trying to maintain state-of-the-art software on old machines is often challenging. Even when newer equipment is used, there is still a high energy cost associated with operating it. The electricity required by several processors cranking out frames non-stop will quickly become expensive. Not to mention, those machines get hot. Even a single rack of slaves will need some type of climate control.

These issues have made many animation companies see the benefits of rendering in the cloud. As high-speed internet access becomes available across the globe, moving large files online has become commonplace. You can upload a file to a render service that will take on the headaches for you. They monitor the system for crashes. They install updates and patches. They worry about energy costs. Plus, there is the speed advantage. Companies dedicated to rendering are able to devote more resources to their equipment. Their farm will have more nodes. Their hardware will be more up-to-date and faster.

The solution cloud rendering provides couldn't come fast enough. There seems to be no end to the increasing demands made on render hardware. Artists and directors are constantly pushing the limits of 3D animation. Scenes that may have been shot traditionally a few years ago are now created with computer graphics to give directors more control. With modern 3D software it's easier to meet those creative demands. Crashing waves in a fluid simulation, thousands of knights rushing towards the camera or millions of trees swaying in the wind might be cooked up on a single workstation. But even as software improves, processing those complex scenes takes more power than ever before.

It's not only artistic demands from content creators putting render hardware through its paces. The viewing public has enjoyed huge improvements to display resolution in recent years. The definition of high-definition keeps expanding. What many consider full HD at 1920 x 1080 is old news. Many platforms now support 4K resolution at 4096 x 2160. In many cases, that's large enough for hi-res printing!

The public is also getting used to higher frame rates. For years, the industry standard for film has been 24 frames per second (fps). But in 2012, Peter Jackson shot The Hobbit: An Unexpected Journey at 48 fps. While some prefer the classic look of 24 fps film, animators have to prepare for higher frame rates becoming standard.

Everything is pointing towards cloud rendering becoming the norm. While studios can put up with the hassle of their own render farms, there is little need to when companies like Rayvision can do it better. Managing your own render farm could soon be as uncommon as hosting your own website. It's something that is simply better done by a dedicated company. Welcome to the age of cloud rendering.

About the author: Shaun Swanson has fifteen years of experience in 3D rendering and graphic design. He has used several software packages and has a very broad knowledge of digital art ranging from entertainment to product design.

This article was posted on http://goarticles.com/article/The-Future-of-3D-Rendering-Is-in-the-Cloud/9416094/

The Evolution of 3D Rendering and Why You Need to Consider It

3D Rendering

When it comes to the world of rendering, it is easy to say that 3D rendering has become by far the most popular kind among graphic designers, filmmakers, architects, and others. It is gradually changing the dynamics of the engineering and creative graphics industries.

This type of rendering manipulates images in three dimensions, producing a more realistic visual effect. Here are a few of the many reasons why you should consider using a cloud rendering service for 3D rendering.

Reduced Design Cycles

3D rendering makes solid modeling possible, which shortens the design cycle and ultimately results in a streamlined manufacturing process. Using cloud rendering services for 3D visuals gives you a highly qualified team that can bring out the best in your project.

High Quality with Low Costs

One of the best reasons to consider 3D rendering is that you will not only save time, but also end up with a quality 3D product that can make waves. Moreover, the costs involved in this type of rendering are significantly lower. What more could you want when you have access to the best quality services in the market at a cost-effective rate!

Online Publications

3D rendered projects can easily be published online, without hassle. They can help you attract attention via social media and reach potential clients who can pay top dollar for your images.

At Fox Renderfarm we offer pioneering self-service cloud computing for rendering, research into cluster rendering, parallel computing technology and computing services for cloud rendering.

If you are considering keeping up with technology by using 3D rendering in your projects, cloud is the way to go! If you are considering the services of online cloud rendering for your project, or even Fox Renderfarm, please contact us via our Live Chat at the bottom right corner of our website or at service@foxrenderfarm.com and we will be happy to be of service!

Why do you need to use a render farm?

3D Rendering

With the rapid development of 3D movies in recent years, VFX movies have also received more and more attention. Looking at box office rankings, the top five movies, Avengers: Endgame, Avatar, Titanic, Star Wars: The Force Awakens, and Avengers: Infinity War, are almost all inseparable from VFX production. And Disney's 3D animated Frozen series swept up $2.574 billion worldwide, setting a new record for the global animated film box office.

Why do you need to use a render farm? Rendering is indispensable to these movies, and almost all of it is done by render farms of all sizes.

Rendering belongs to the later part of 3D animation production, and it is a very time-consuming step. An 80-minute animated movie can often take thousands to tens of thousands of hours to render.

In the animated film Coco, 29,000 lights were used in the train station scene, 18,000 lights in the cemetery, and 2,000 RenderMan practical lights in the Undead World. The production team used the RenderMan API to create 700 special point cloud lights, which expanded to 8.2 million lights. Lights on this scale are a nightmare for rendering artists, and early tests could not complete rendering at the level production required. The team once ran a test render and found that these light-filled shots actually took 1,000 hours per frame! They continued their research and shortened that to 125 hours and then 75 hours. By final production, the rendering time was 50 hours per frame. A movie runs at 24 frames per second, or 1,440 frames per minute. If a movie is taken to be 90 minutes long, that is 1,440 frames * 90 minutes = 129,600 frames, and 129,600 * 50 = 6,480,000 hours. Converted into years, that is about 740 years.

"To render Avatar, Weta Digital used a 10,000-square-foot server farm. There are 4,000 servers with a total of 35,000 processor cores. The average time to render a single frame is 2 hours, 160 minutes of video. The overall rendering time takes 2,880,000 hours, which is equivalent to 328 years of work for one server!"

If the rendering were done on an ordinary computer alone, this would be almost impossible. So the production team built its own render farm, relying on powerful machines in huge numbers to complete such an enormous amount of work.

For small teams, studios, freelancers, and individual producers, rendering takes just as long. Tying up their own computers for rendering is undoubtedly time-consuming, and that is unacceptable to them. For these reasons, coupled with uncontrollable factors such as computer crashes, VFX artists often cannot finish the work within the time set by the producer because of rendering problems. Moreover, the labor, equipment, and electricity needed to maintain rendering machines are a big expense for the production company!

So it is very necessary to work with render farms. Doing so not only saves the production staff's time, but also puts a large number of machines to work so the job is finished in a short time.

The emergence of cloud rendering technology has made the render farm more mature. Cloud rendering means uploading materials to the cloud and using the rendering company's cloud computing system to render remotely.

Another advantage of cloud rendering is that small studios can also take on the rendering of high-quality material. In the film industry, this technological change is no less significant than the change that cloud computing itself brought to the IT industry.

Taking Fox Renderfarm as an example, no matter where VFX artists are, they can use cloud rendering services to complete the "trilogy": 1. Upload your project; 2. Wait for the rendering to complete; 3. Download to the local machine. In addition, they can call on more machines according to their urgency, arrange the order of rendering, and monitor the progress of rendering at any time. At the same time, the price is relatively low, which makes it acceptable to many film and television VFX companies, rendering studios, and individual designers. So why not start a free trial on Fox Renderfarm now and experience the cloud rendering services that speed up your rendering?
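The render-time arithmetic earlier in this article can be checked with a few lines of Python. This is only a rough sketch: the helper names are invented for the illustration, a year is assumed to be 365 days of round-the-clock rendering, and the farm estimate assumes the work splits perfectly evenly across machines with no upload, queueing, or failure overhead.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: one machine rendering non-stop


def total_frames(minutes, fps=24):
    """Number of frames in a film of the given length."""
    return minutes * 60 * fps


def render_hours(frames, hours_per_frame):
    """Total machine-hours needed to render every frame."""
    return frames * hours_per_frame


def hours_to_years(hours):
    """Convert machine-hours into years on a single machine."""
    return hours / HOURS_PER_YEAR


def wall_clock_hours(machine_hours, machines):
    """Idealized elapsed time when the frames are split evenly."""
    return machine_hours / machines


if __name__ == "__main__":
    frames = total_frames(90)          # 90-minute film at 24 fps
    hours = render_hours(frames, 50)   # 50 hours per frame, as in the Coco example
    print(frames, hours, round(hours_to_years(hours)))
    # Hypothetical farm of 4,000 machines sharing the same job
    print(wall_clock_hours(hours, 4000) / 24, "days")
```

With the article's figures this gives 129,600 frames and 6,480,000 machine-hours, or roughly 740 years on one machine. Spread over a hypothetical 4,000 machines, the same job shrinks to about 67.5 days under these idealized assumptions, which is the basic case for using a farm.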

Fox's Got Talent 3D ‘Easter Egg’ Challenge

3D Rendering

Wanna show your talent on the world stage while winning big prizes?

Today's the day! Share your fun stories or crazy ideas about 'Easter Eggs' through 3D renders with us! The Top 3 artworks will be featured and promoted in multiple online channels, and their authors will get a good deal of render coupons from the world-leading cloud render farm!

Fox's Got Talent 3D 'Easter Egg' Challenge

Theme: Easter Egg

Spring has sprung, and Easter is coming soon. Speaking of Easter eggs, you may picture rabbits holding colorful eggs, or you may think about all kinds of candies and chocolates. And if you are a movie fan, lots of hidden surprises will spring to mind… Whatever Easter Egg means to you, set your imagination free, create a 3D render, and tell us your story.

Enjoy your creation and happy rendering!

Time

Time for entries: Feb. 26th - Mar. 30th (UTC+8)
Winners announcement time: Apr. 6th (UTC+8)

Prizes

3 artworks will be selected and awarded with fast and easy cloud rendering services provided by Fox Renderfarm.

1st Place: Fox Renderfarm: render credits worth US $500
2nd Place: Fox Renderfarm: render credits worth US $300
3rd Place: Fox Renderfarm: render credits worth US $200

Besides, the winning artworks will gain a great amount of exposure and publicity.

Interview with Fox Renderfarm
Advertisement and promotion on our official website, social media accounts, and newsletters.

Fox Renderfarm cooperates closely with many excellent CG studios and artists worldwide. Come and let your talent shine on the global stage!

How to submit

Join the CG & VFX Artist Facebook group and post your artwork in the group with the tags FGT3D and FGT3DEasterEgg2020. Or send your artwork to fgt3d@foxrenderfarm.com with your name and/or the studio's name.

Rules

Your entry must relate to the challenge's theme (we strongly encourage you to set your imagination free)
Your entry must be a 3D rendered image
Your entry can be created by one artist or a group
There's no limitation on styles or the choice of software and plugins
Your entry must be original art created specifically for the challenge (no existing projects)
Minimal use of third party assets is allowed, as long as they are not the main focus of your scene (third party textures and materials are not counted under this rule and can be used freely)
No fanart allowed
Feel free to enhance your rendering
Images that depict hate, racism, sexism or other discriminatory factors are not allowed
Works must be submitted before the deadline
