Fox Renderfarm Blog

Top 4 Best GPU and CPU Render Farms in 2025

GPU Render Farm

Why Do You Need a Render Farm?

To render a 3D project, animations and visualizations must be calculated to define light, reflections and shadows. This computation requires time and processing power in addition to the 3D application itself.

An animation typically runs at about 30 frames per second, so a one-minute sequence consists of 1,800 individual frames. Depending on the complexity of the scene, a single frame can take anywhere from a few seconds to several minutes or even hours to calculate. Scenes with complex lighting and textures take the longest. For example, if a local computer renders one frame of a complex scene in 10 minutes, calculating 1,800 frames takes about 300 hours, or roughly 12.5 days. The computer runs at full capacity during this time and is unlikely to be usable for anything else.

Investing in a powerful workstation or an in-house render farm requires a lot of money, and part of that money is wasted if the hardware is not used to its full potential. In addition to purchasing the hardware, you also need to pay for electricity, maintenance and software licenses for each node. Using a GPU render farm or CPU render farm can therefore be very helpful in these situations.
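The render-time arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name `render_days` and the `nodes` parameter are hypothetical, and dividing the work evenly across nodes assumes perfect scaling, which a real farm will not quite reach.

```python
def render_days(seconds_of_animation, fps=30, minutes_per_frame=10.0, nodes=1):
    """Return (frame count, wall-clock days) for a sequence.

    Assumes every frame takes the same time and that frames are split
    evenly across `nodes` machines (an idealized assumption).
    """
    frames = seconds_of_animation * fps
    total_minutes = frames * minutes_per_frame / nodes
    return frames, total_minutes / (60 * 24)


# One minute of animation on a single local machine.
frames, days = render_days(60)
print(f"{frames} frames, {days} days on one machine")

# The same job spread over 100 hypothetical farm nodes.
_, farm_days = render_days(60, nodes=100)
print(f"{farm_days * 24} hours on 100 nodes")
```

With the figures from the text (30 frames per second, 10 minutes per frame), one machine needs 12.5 days, while the idealized 100-node case finishes in 3 hours.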
Some of the obvious benefits of using a render farm are time savings and lower rendering costs.

What Factors Influence the Choice of a Render Farm?

Easy to Use

- A consistent UI/UX across the desktop app, the 3D application plugin and the web interface;
- Pipelines and workflows that integrate easily with your own;
- Upload speed and upload options, such as FTP, sFTP, Dropbox, Google Drive, OneDrive, etc.

SaaS and IaaS

- IaaS-based render farms offer full control over remote servers and allow clients to install any software and customize projects as required (suited to complex pipelines and large projects).
- SaaS-based render farms are simpler to use; however, when rendering goes wrong, the end user cannot adjust the project on the farm (suited to simple workflows and smaller projects).

Compatibility with Software Versions and Plugins

- Check which software, plugins and rendering engines are supported.

Price

- Listed prices, actual costs, hidden costs and estimated costs.

Rendering Speed

- How many nodes are available for rendering your job?
- What CPU and GPU types are offered?

Other

- Scene analysis speed and data logging (reports), price and time estimates;
- Support services: fast response, multiple support channels, availability of human staff, true 24/7 availability;
- Discounts, promotions, how regularly they are offered, trials, etc.;
- Security standards, policies and guidelines for privacy protection;
- Payment methods, claims and refunds.

Top 4 Best GPU and CPU Render Farms in 2025

1. FOX RENDERFARM - Best GPU and CPU Render Farm

Supported programs:

Pros:

- Offers CPU and GPU cloud services;
- Free $25 credit to try the service after creating an account;
- Supports 2 types of submission: web submission and desktop client submission (Windows, Linux);
- Supports a large number of major 3D software packages and renderers;
- Acceptable prices;
- 24/7 online technical support;
- Well-documented FAQs and tutorials;
- Support for free data transfer tools.

Cons:

- Complex UI/UX and scene uploads;
- Lack of hardware details;
- SaaS only.

2. REBUSFARM

Supported programs:

Pros:

- GPU and CPU render farm services available;
- Free trial of 25 RenderPoints, worth $30.01, for every new registrant;
- User-friendly UI/UX, easy to upload scene files;
- Large number of supported 3D packages;
- Reasonable pricing and frequent discounts;
- Accurate online price calculator;
- 24/7 support;
- Well-documented FAQs and tutorials;
- Strong support for CPU-based rendering.

Cons:

- SaaS only.

3. GARAGE FARM

Supported programs:

Pros:

- GPU and CPU render farm services available;
- Reasonable prices;
- Large number of supported 3D packages;
- Free $25 render credit for its SaaS-based render farm;
- Well-documented tutorials and a great forum;
- IaaS and SaaS options available;
- 24/7 support.

Cons:

- Limited animation cost calculator;
- Complex UI/UX and scene uploads;
- OctaneRender via SaaS is not currently supported;
- No free data transfer for IaaS users.

4. IRENDER FARM

Supported programs:

iRender Farm is an IaaS render farm where you can install any software and application on a remote server.

Pros:

- CPU and GPU cloud services available;
- Free $5 rendering credit to try the service after creating an iRender account;
- Gives users full control of the physical server through its IaaS model;
- Supports any application running on Windows or Ubuntu;
- Support for free data transfer tools;
- Support for storing users' workspaces;
- Support for RTX 2080 Ti and RTX 3090 cards with NVLink;
- 24/7 support.

Cons:

- Limited FAQ documentation;
- IaaS only.

The Best Render Farm for Specific 3D Software

Best Render Farm for Blender

Here are the top 3 render farms for Blender:

- Fox Renderfarm
- Rebus Farm
- Garage Farm

Best Redshift Render Farm

Fox Renderfarm has tested and selected the top 3 Redshift render farms for Maya, Cinema 4D, Blender and other 3D software:

- Fox Renderfarm
- iRender
- Rebus Farm

Fox Renderfarm hopes this will be of some help to you.
As you know, Fox Renderfarm is an excellent cloud rendering services provider in the CG world, so if you need a GPU render farm or CPU render farm, why not try Fox Renderfarm, which offers a free $25 trial for new users? Thanks for reading!

Full List of GPU Render Farm For You to Choose

GPU Rendering

The most significant difference between CPU and GPU rendering is that CPU rendering is generally more accurate, while GPU rendering is faster. Each has its own strengths. So are you looking for a GPU render farm for your 3D software?

In this article, Fox Renderfarm, the leading cloud rendering service provider and GPU render farm, introduces 8 GPU render farms.

What is GPU rendering?

Top 8 Best GPU Render Farms

1. Fox Renderfarm - Best GPU Render Farm

Fox Renderfarm, a TPN-accredited render farm, supports both CPU and GPU rendering. As a GPU render farm, Fox Renderfarm supports not only popular 3D software but also most GPU renderers, such as Redshift and Blender Cycles.

Fox Renderfarm's prices start as low as $0.0306 per core hour, and it provides a price estimator. It offers a free $25 trial for all new registrants.

In addition, Fox Renderfarm frequently hosts 3D challenges, such as the monthly FGT Art. If your artwork is chosen as the featured work, you can be rewarded with a $200 render coupon.

2. Rebus Farm - Powerful GPU Render Farm

RebusFarm supports all common 3D applications, as well as CPU and GPU rendering. Its GPU render cloud runs on NVIDIA Quadro RTX 6000 cards. RebusFarm also offers a free trial worth $25.50.

RebusFarm offers prices as low as 1.22 cents/GHzh.

3. iRender - Strong GPU Render Farm

iRender is an IaaS and PaaS provider as well as a GPU render farm. It supports almost all 3D software, such as Cinema 4D, Houdini, Maya, 3ds Max and Blender, and is optimized for multi-GPU rendering tasks (Redshift, Octane, Blender, V-Ray, Iray, etc.).

iRender charges based on time of use and offers 24/7 live chat support.

4. Garagefarm - Cloud-based GPU Render Farm

GarageFarm is a fully automated cloud-based CPU and GPU render farm. GarageFarm allows you to easily upload and manage your projects. It supports most 3D software and plugins, such as 3ds Max, Maya, Cinema 4D, After Effects and Blender.
GarageFarm's GPU nodes come with 8x Tesla K80 cards and 128 GB RAM. It offers a $25 free trial and 24/7 live chat support.

5. Ranch Computing - CPU and GPU Render Farm

Ranch Computing is a render farm that supports both CPU and GPU rendering. It supports 3ds Max, Blender, Cinema 4D, Houdini and other software, and lists the supported versions on its website.

Ranch Computing offers a free trial worth 30€ and a 50% discount for academic projects. It does not offer live chat, but you can reach support via email or Skype.

6. SuperRenders Farm - GPU Render Farm

For GPU rendering, SuperRenders Farm is equipped with NVIDIA GTX 1080 Ti graphics cards and 48 GB to 128 GB of RAM.

SuperRenders Farm supports all major applications used in the industry, such as 3ds Max, Maya and C4D.

Sign up for SuperRenders and you will receive a free $25 credit.

7. Animarender - 24/7 GPU Render Farm

Animarender is an online rendering service that offers 24/7 live support. It supports CPU and GPU rendering for all popular 3D modeling software, such as 3ds Max, Cinema 4D, Maya and Blender.

Animarender has its own software, AnimaManager. AnimaManager allows you to install plugins directly into your 3D software and render with one click from the software interface. AnimaManager supports both Windows and Mac.

8. RenderStreet - Blender GPU Render Farm

RenderStreet is primarily a Blender and Modo render farm that supports both CPU and GPU rendering. Its GPU servers are equipped with top-of-the-line NVIDIA® T4 GPU cards, roughly equivalent to one RTX 3080 card per server. RenderStreet charges either by the hour or by the month. Its one-day trial costs only $1.

Summary

The best GPU render farm is the one that fits your needs. If you're still unsure, try Fox Renderfarm, a powerful GPU render farm and leading cloud rendering service provider, which offers a $25 free trial!

Where can I get GPU rendering services?

GPU Render Farm

With the development of computer technology, demand for graphics and image processing keeps growing. In particular, emerging 3D technology has brought graphics processing and 3D computing into the video game and movie industries. Rendering is an essential part of 3D production.

V-Ray RT GPU fast rendering display from Dabarti CGI Studio

Rendering mainly refers to the process of generating images from a model by means of software. The program calculates the geometry, vertices and other information of the graphics to be drawn, and then produces the image. Both the CPU and the GPU can perform rendering tasks; GPU-based rendering simply does the work on the graphics card. Because the GPU can carry out a very large number of operations at once, GPU rendering is taking a growing share of the work in graphical interfaces and 3D games.

GPU renderers are increasingly recognized by CG professionals; they include Redshift, Blender's Cycles, Octane Render, NVIDIA Iray, and more. Therefore, in addition to CPU rendering services, many render farms have begun to support GPU rendering services.

Where can I get GPU rendering services? As the world's leading cloud rendering service provider and render farm, Fox Renderfarm supports not only CPU rendering services but also GPU rendering services.

Fox Renderfarm offers massive rendering nodes at prices as low as $0.0306 per core hour. Boost your 3D production: 1 month rendered in 5 mins. You are welcome to get a $25 free trial now.
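To get a feel for what a per-core-hour price means for a real job, the sketch below multiplies out a rough cost estimate. Only the $0.0306 rate comes from the text, where it is quoted as an "as low as" price. The function name, the 32-core node and the job size are hypothetical, and actual billing rules (GPU pricing, minimum charges, discounts) may differ.

```python
RATE_USD_PER_CORE_HOUR = 0.0306  # "as low as" price quoted in the text


def estimate_cost(frames, minutes_per_frame, cores_per_node,
                  rate=RATE_USD_PER_CORE_HOUR):
    """Rough job cost, assuming billing is simply core-hours times rate."""
    node_hours = frames * minutes_per_frame / 60
    core_hours = node_hours * cores_per_node
    return core_hours * rate


# Hypothetical job: 1,800 frames at 10 minutes each on 32-core nodes.
cost = estimate_cost(frames=1800, minutes_per_frame=10, cores_per_node=32)
print(f"Estimated cost: ${cost:.2f}")
```

Because the estimate depends on total core-hours rather than on how many nodes run at once, spreading the job over more nodes shortens the wait without changing the cost under this simple model.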

Three Aspects to See the Differences Between GPU and CPU Rendering (1)

CPU Rendering

Fox Renderfarm, the fast cloud rendering services provider and GPU & CPU render farm, will tell you the difference between GPU and CPU rendering.

The Background of Graphics Card

Around the year 2000, the graphics card was still referred to as a graphics accelerator. Something called an "accelerator" is usually not a core component; think of Apple's M7 coprocessor. As long as a machine had a basic graphics output function, it could drive a monitor perfectly well. At that time, separate graphics processors were found only in a few high-end workstations and home consoles. Later, with the growing popularity of the PC and the development of games and dominant software such as Windows, hardware manufacturers began to simplify their work processes, and graphics processors, or graphics cards, gradually became popular.

There are as many GPU manufacturers as CPU manufacturers, but only three of them (AMD, NVIDIA and Intel) are widely known and recognized. To see the difference between GPU and CPU rendering, let's start with the following three aspects.

1. The Functions of GPU and CPU

To understand the differences between GPU and CPU, we first need to understand what each is designed for. A modern GPU's functionality covers all aspects of graphics display. You may have seen the rotating cube used as a test in older versions of DirectX. Displaying such a cube takes several steps. Let's start simple. Imagine the cube stripped of its "X" image, leaving only a wireframe. Then imagine the wireframe without the connecting lines, leaving only eight points (the eight vertices of the cube). Now we can work out how to rotate the eight points in order to rotate the cube. When you first create this cube, you must create the coordinates of the eight points, which are usually represented by vectors of at least three dimensions.
Next, rotate these vectors. In linear algebra a rotation is represented by a matrix, and rotating a vector means multiplying the matrix by the vector. Rotating the eight points therefore means performing eight matrix-vector multiplications. It is not complicated; it is simply calculation, but a large amount of it. Eight points mean eight calculations, and 2,000 points mean 2,000 calculations. This vertex transformation is one part of the GPU's work, and it is also the simplest step. There is much more to it than that.

In conclusion, the CPU and GPU are designed for different tasks and applied in different scenarios. The CPU is designed for generality and logical analysis: it deals with a variety of data types, categorizes large volumes of data and handles interrupts. All of this makes the CPU's internal structure extremely complicated. The GPU, by contrast, is specialized for large volumes of highly uniform, mutually independent data in a pure computing environment that does not need to be interrupted. As a result, the CPU and GPU have very different architectures (schematic diagram).

Now please follow the best CPU & GPU render farm and cloud rendering services provider to our next part: Three Aspects to See the Differences Between GPU and CPU Rendering (2).
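The vertex transformation described above, eight matrix-vector multiplications for the eight corners of the cube, can be written out as a minimal Python sketch. The choice of the z axis, the cube size and the helper names (`rotation_z`, `mat_vec`, `rotate`) are assumptions made for illustration. A GPU would run these independent multiplications in parallel rather than in a loop.

```python
import math


def rotation_z(theta):
    """3x3 matrix for a rotation of `theta` radians about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-component vector."""
    return tuple(sum(m[r][k] * v[k] for k in range(3)) for r in range(3))


# The eight vertices of a cube centered at the origin.
CUBE = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]


def rotate(points, theta):
    """Rotate every point: one matrix-vector multiplication per vertex."""
    m = rotation_z(theta)
    return [mat_vec(m, p) for p in points]


# Rotate the cube by 90 degrees and print each vertex before and after.
rotated = rotate(CUBE, math.pi / 2)
for before, after in zip(CUBE, rotated):
    print(before, "->", tuple(round(c, 6) for c in after))
```

Each vertex is transformed independently of the others, which is exactly the kind of uniform, uninterrupted workload the text says the GPU is built for.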

Fox Renderfarm Launches GPU Rendering

Blender Cycles

Rendering and previewing in a flash!

The craze for Marvel's superhero movie Deadpool swept the world. As the first full-CGI realistic human feature film in Asia, Legend of Ravaging Dynasties dominated the headlines once its trailer came out. These two movies were rendered with GPU rendering engines.

Clearly, GPU computing cards and GPU rendering engines are gradually being adopted in film production. It is a good start!

Now, as the leading render farm in the industry, Fox Renderfarm launches GPU rendering. Start a free trial of Fox Renderfarm's GPU rendering today!

What's the difference between GPU and CPU?

A simple way to understand the difference between a CPU and a GPU is to compare how they process tasks. A CPU consists of a few cores optimized for sequential serial processing, while a GPU has a massively parallel architecture consisting of thousands of smaller, more efficient cores designed for handling multiple tasks simultaneously. Adam Savage and Jamie Hyneman made a painting demonstration to show the difference between CPU and GPU:

Mythbusters Demo GPU versus CPU

What's the advantage of GPU rendering?

In graphics rendering, covering not only films and animation but also CG art, the GPU, with its computing power and an architecture designed specifically for graphics acceleration, gives users a more efficient rendering solution: GPU rendering. GPU rendering has the advantages of high speed and low cost. Moreover, GPU rendering is becoming more and more widely available, and many high-quality works rendered on GPUs have been released. It is growing in popularity with users at home and abroad.

Think of the CPU as the manager of a factory, thoughtfully making tough decisions. The GPU, on the other hand, is more like the entire group of workers at the factory. While they can't do the same type of computing, they can handle many, many more tasks at once without becoming overwhelmed.
Many rendering tasks are the kind of repetitive, brute-force functions GPUs are good at. Plus, you can stack several GPUs into one computer. This all means GPU systems can often render much, much faster!

There is also a huge advantage in CG production: GPU rendering is so fast that it can often provide real-time feedback while you work. No more going to get a cup of coffee while your preview render chugs away. You can see material and lighting changes happen before your eyes.

GPU Renderers

1. Redshift is the world's first fully GPU-accelerated, biased renderer and it is also the most popular GPU renderer. Redshift uses approximation and interpolation techniques to achieve noise-free results with relatively few samples, making it much faster than unbiased rendering. In terms of rendering results, Redshift reaches the highest level of GPU rendering and produces high-quality, movie-level images.

2. Blender Cycles is Blender's ray-tracing-based, unbiased rendering engine that offers stunning ultra-realistic rendering. Cycles can be used as part of Blender or stand-alone, making it a perfect solution for massive rendering on clusters or at cloud providers.

3. NVIDIA Iray is a highly interactive and intuitive, physically based rendering solution. NVIDIA Iray simulates real-world lighting and practical material definitions so that anyone can interactively design and create the most complex of scenes. Iray provides multiple rendering modes addressing a spectrum of use cases, from real-time and interactive feedback to physically based, photorealistic visualizations.

4. OctaneRender is the world's first and fastest GPU-accelerated, unbiased, physically correct renderer. This means that Octane uses the graphics card in your computer to render photo-realistic images super fast.
With Octane's parallel compute capabilities, you can create stunning works in a fraction of the time.

5. V-Ray RT (Real-Time) is Chaos Group's interactive rendering engine that can utilize both CPU and GPU hardware acceleration to show updates to rendered images in real time as objects, lights and materials are edited within the scene.

6. Indigo Renderer is an unbiased, physically based and photorealistic renderer which simulates the physics of light to achieve near-perfect image realism. With an advanced physical camera model, a super-realistic materials system and the ability to simulate complex lighting situations through Metropolis Light Transport, Indigo Renderer is capable of producing the highest levels of realism demanded by architectural and product visualization.

7. LuxRender is a physically based and unbiased rendering engine. Based on state-of-the-art algorithms, LuxRender simulates the flow of light according to physical equations, thus producing realistic images of photographic quality.

GPU Computing Card Parameter Table

Fox Renderfarm's GPU rendering now supports Redshift for Maya and Blender Cycles. The Fox Renderfarm cluster has more than 100 NVIDIA Tesla M40 cards; each server has 128 GB of system memory and two M40 computing cards. Welcome to Fox Renderfarm to experience super fast GPU cloud rendering!

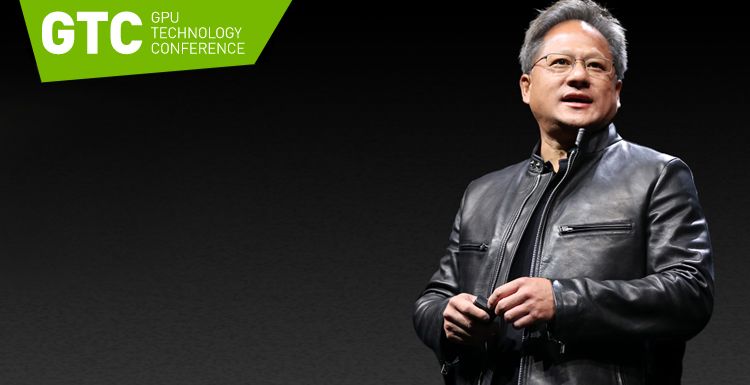

NVIDIA GTC China Conference Focuses on AI, Autonomous Driving, Gaming, and HPC

NVIDIA Iray

On December 18th, NVIDIA held its annual GTC China conference in Suzhou. This time, NVIDIA founder and CEO Jensen Huang focused on four major themes: artificial intelligence (AI), automotive, gaming and HPC.

Jensen Huang said that this is the largest GTC China to date, with 6,100 participants, a 250% growth in attendees in 3 years.

Jensen Huang announced a series of new NVIDIA products and partnerships. The core content is as follows:

- Baidu and Alibaba use the NVIDIA AI platform for their recommendation systems;
- Launched the seventh-generation inference optimization software, TensorRT 7, to further optimize real-time conversational AI. Inference latency on the T4 GPU is 1/10 of that on the CPU;
- The NVIDIA AI inference platform is widely used worldwide;
- Launched a software-defined AV platform and Orin, the next-generation autonomous driving and robotics SoC, with a computing power of 200 TOPS and production planned to start in 2022;
- Open-sourced the NVIDIA DRIVE autonomous vehicle deep neural networks to the transportation industry and launched NVIDIA DRIVE pre-trained models on NGC;
- Didi will use NVIDIA GPUs to train machine learning algorithms in the data center, and use NVIDIA DRIVE to provide inference capabilities for its L4 self-driving cars;
- Launched a new version of the NVIDIA Isaac software development kit (SDK), which provides updated AI perception and simulation functions for robots;
- Announced six games supporting RTX technology;
- Tencent is cooperating with NVIDIA to launch the START cloud gaming service, bringing the PC gaming experience to the cloud in China;
- Announced that the Rayvision (Fox Renderfarm) cloud rendering platform, the largest cloud rendering platform in Asia, will be equipped with NVIDIA RTX GPUs, with the first batch of 5,000 RTX GPUs to be launched in 2020;
- Released the Omniverse open 3D design collaboration platform for the architecture, engineering and construction (AEC) industry;
- For genome sequencing, released the CUDA-accelerated genomic analysis toolkit NVIDIA Parabricks.

AI: Launched in Baidu and Alibaba recommendation systems, launches next-generation TensorRT software

Since Alex Krizhevsky used the NVIDIA Kepler GPU to win the ImageNet competition in 2012, NVIDIA has improved training performance by 300 times in 5 years.

With the combination of Volta, the new Tensor Core GPU, chip-on-wafer packaging, HBM 3D stacked memory, NVLink and the DGX system, NVIDIA is helping more AI research.

AI will scale from the cloud to the edge. NVIDIA is building a platform for each of the following use cases: DGX for training, HGX for hyperscale clouds, EGX for the edge, and AGX for autonomous systems.

1. Baidu and Alibaba recommendation systems use NVIDIA GPUs

Jensen Huang said that one of the most important machine learning models on the Internet is the recommendation system.

Without a recommendation system, people cannot find what they need among hundreds of millions of web searches, billions of Taobao products, billions of TikTok short videos, and countless online news items, tweets and photos.

Deep learning enables automatic feature learning and supports unstructured content data, while GPU acceleration reduces latency and increases throughput.

Generally speaking, building a recommendation system involves two major challenges: the complex model processing that massive data requires, and the real-time requirement that users see recommendation results immediately.

In response, Baidu proposed the AI-Box solution for training advanced large-scale recommendation systems.

Baidu AI-Box uses a Wide and Deep structure. It uses the NVIDIA AI platform to train on terabytes of data with NVIDIA GPUs. It is faster than the CPU, its training cost is only 1/10 of the CPU's, and it supports larger-scale model training.

Similarly, the recommendation system built by Alibaba also uses the NVIDIA AI platform.

On Singles' Day this year, Alibaba's sales exceeded 38 billion U.S. dollars in goods.
There were about 2 billion categories of goods listed on the e-commerce website, and 500 million users were shopping. Sales reached 268.4 billion yuan in a single day, with one billion recommendation requests.

If a user spent 1 second viewing each product, it would take 32 years to view all the products.

Alibaba therefore uses the NVIDIA T4 GPU for the recommendation system, so that every time a user clicks on a product, other related recommended products are shown.

On the CPU, the system handled only 3 queries per second (QPS); the NVIDIA GPU raised this to 780 QPS.

2. Introduced the seventh-generation inference optimization software TensorRT

At the event, Jensen Huang announced the official launch of the seventh-generation inference optimization compiler, TensorRT 7, which supports RNN, Transformer and CNN.

TensorRT is NVIDIA's acceleration software for the inference phase of neural networks. It can greatly improve performance by optimizing AI models.

TensorRT 5, released at the GTC China conference last year, supported only CNNs and only 30 kinds of transformations, while TensorRT 7 has been heavily optimized for Transformer and RNN, achieves efficient operation with less memory, and supports more than 1,000 calculation transformations and optimizations.

TensorRT 7 can fuse operations horizontally and vertically. It can automatically generate code for the large number of RNN configurations designed by developers, fuse LSTM units point by point and even across multiple time steps, and use automatic low-precision inference wherever possible.

In addition, NVIDIA introduced a kernel generation function in TensorRT 7, which can generate an optimized kernel for any RNN.

Conversational AI is a typical example of the powerful features of TensorRT 7.

The task is very complicated. For example, a user speaks a sentence in English and it is translated into Chinese. This process needs to convert the spoken English into text, understand the text, convert it into the desired language, and then synthesize that text into speech.

An end-to-end conversational AI pipeline may consist of 20 or 30 models, using various model structures such as CNN, RNN, Transformer, autoencoder and NLP.

For conversational AI inference, the latency on the CPU is 3 seconds, whereas inference with TensorRT 7 on the T4 GPU takes only 0.3 seconds, which is 10 times faster than the CPU.

3. The NVIDIA AI platform is widely used

In addition, Kuaishou, Meituan and other Internet companies are also using the NVIDIA AI platform for deep recommendation systems to improve click-through rates, reduce latency, increase throughput, and better understand and meet user needs.

For example, Meituan users who want to find a restaurant or hotel do so through search.

Conversational AI requires programmability, broad software support and low-latency GPUs. The NVIDIA AI platform, including these models, will provide support for the smart cloud.

NVIDIA EGX is an all-in-one AI cloud built for edge AI applications.

It is designed for streaming AI applications, Kubernetes container orchestration, and security for data in motion and at rest, and it connects to all IoT clouds.

For example, Walmart uses it for smart checkout, the US Postal Service sorts mail through computer vision on EGX, and Ericsson will run 5G vRAN and AI Internet of Things on EGX servers.

Launched a new generation of automotive SoCs with a computing power of 200 TOPS

NVIDIA DRIVE is an end-to-end autonomous vehicle (AV) platform.
The platform is software-defined rather than built on a fixed-function chip, enabling a large number of developers to collaborate in a continuous integration and continuous delivery development mode.

Jensen Huang stated that NVIDIA would open-source the deep neural networks of NVIDIA DRIVE self-driving cars to the transportation industry on the NGC container registry.

1. The next-generation autonomous driving processor Orin has 7 times the computing power of Xavier

NVIDIA released NVIDIA DRIVE AGX Orin, a new generation of autonomous driving and robotics SoC that meets system safety standards such as ISO 26262 ASIL-D. It will include a series of configurations based on a single architecture and is scheduled to begin production in 2022.

Orin is the result of four years of work by the NVIDIA team. It is used to process multiple high-speed sensors, sense the environment, create a model of the surroundings and determine its own position in it, and develop an appropriate action strategy based on specific goals.

It uses a 64-bit Arm Hercules CPU with 8 cores, 17 billion transistors, and a new deep learning and computer vision accelerator.
Its performance reaches 200 TOPS, almost 7 times that of the previous generation (Xavier).

It is easy to program, is supported by rich tools and software libraries, and has new functional safety features that enable CPU and GPU lockstep operation and improve fault tolerance.

The Orin series scales from L2 to L5, is compatible with Xavier, and can make full use of existing software, so developers can use products that span multiple generations after a one-time investment.

A new addition is a low-cost version for OEMs that want to build L2 systems with a single camera while still using the software stack shared across the entire AV product line.

In addition to chips, many NVIDIA technologies, such as its platform and software, can be applied in automobiles, helping customers customize applications to further improve product performance.

2. Introduced NVIDIA DRIVE pre-trained models

Jensen Huang also announced the launch of NVIDIA DRIVE pre-trained models on NGC.

Safe, working autonomous driving technology requires many AI models, with diverse and redundant algorithms.

NVIDIA has developed advanced perception models for detection, classification, tracking and trajectory prediction, as well as for perception, localization, planning and mapping.

Developers can register to download these pre-trained models from NGC.

3. Didi selects NVIDIA autonomous driving and cloud infrastructure

Didi Chuxing will use NVIDIA GPUs and other technologies to develop autonomous driving and cloud computing solutions.

Didi will use NVIDIA GPUs to train machine learning algorithms in the data center, and will use NVIDIA DRIVE to provide inference capabilities for its L4 self-driving cars.

In August this year, Didi turned its autonomous driving department into an independent company and launched extensive cooperation with industry chain partners.

As part of the AI processing of Didi Autopilot, NVIDIA DRIVE uses multiple deep neural networks to fuse data from various sensors (cameras, lidar, radar, etc.) to achieve a 360-degree understanding of the car's surroundings and plan a safe driving path.

To train safer and more efficient deep neural networks, Didi will use NVIDIA GPU data center servers.

Didi Cloud will adopt a new vGPU license model, which aims to provide users with a better experience, richer application scenarios, and more efficient, innovative and flexible GPU cloud computing services.

4. Released the NVIDIA Isaac Robot SDK

For the field of robotics, Jensen Huang announced the launch of the new NVIDIA Isaac robotics SDK, which greatly speeds up the development and testing of robots. It lets robots acquire AI-driven perception and training functions through simulation, so that they can be tested and validated in various environments and situations, saving costs.

The Isaac SDK includes Isaac Robotics Engine (which provides the application framework), Isaac GEM (pre-built deep neural network models, algorithms, libraries, drivers and APIs), a reference application for indoor logistics, and Isaac Sim for training robots. The generated software can be deployed to real robots that run in the real world.

Among these, the camera-based perception deep neural networks include models for object detection, free space segmentation, 3D pose estimation and 2D human pose estimation.

The new SDK's object detection has also been updated with the ResNet deep neural network, which can be trained using NVIDIA's Transfer Learning Toolkit, making it easier to add new objects to detect and to train new models.

In addition, the SDK provides multi-robot simulation. Developers can put multiple robots into the simulation environment for testing. Each robot can run a separate version of the Isaac navigation software stack while moving in a shared virtual environment.

The new SDK also integrates support for NVIDIA DeepStream software.
Developers can deploy DeepStream and NVIDIA GPUs at the edge to process video streams for AI-powered robotics applications.

Robot developers who have already written their own code can connect their software stack to the Isaac SDK and access Isaac functions through the C API, which greatly reduces the need for programming language conversion. C API access also allows developers to use the Isaac SDK from other programming languages.

According to Jensen Huang, universities in China use Isaac to teach and study robotics.

5. NVIDIA's automotive ecosystem

NVIDIA has been in the automotive field for more than 10 years and has done a great deal of work with its partners so that the AI "brain" can better understand and even drive vehicles.

Once continuous simulation, testing, and verification confirm that the system works, NVIDIA and its partners can deploy it on real roads.

Whether it is a truck company, a regular car company, or a taxi company, it can use this platform to customize software for specific models.

NVIDIA provides transfer learning tools that allow users to train models in-house and re-optimize them with TensorRT.

In addition, NVIDIA has developed a federated learning system, which is particularly useful for industries that value data privacy.

Whether it is a hospital, a laboratory, or a car company, after training a neural network an organization can upload only the processed results to global servers while keeping the data local to ensure data privacy.

Gaming: Launches START Cloud Gaming Service with Tencent

"Minecraft" is the world's best-selling video game. It has recently reached 300 million registered users in China. NVIDIA and Microsoft jointly announced that "Minecraft" will support real-time ray tracing (RTX) technology. NVIDIA RTX technology is currently supported by several of the industry's most popular rendering platforms.

People want thinner and lighter gaming notebooks.
For this, NVIDIA created the Max-Q design, which combines ultra-high GPU performance with overall system optimization so that powerful GPUs can be used in thin and light notebooks.

This year, China shipped more than 5 million gaming notebooks, a four-fold increase in five years. GeForce RTX Max-Q notebooks are the fastest growing gaming platform.

In addition, Jensen Huang announced that Tencent and NVIDIA have launched the START cloud gaming service to bring the PC gaming experience to the cloud in China.

NVIDIA GPUs will power Tencent's START cloud gaming platform. Tencent plans to expand the platform to millions of players, giving them the same gaming experience as a local game console even on devices with insufficient performance.

The NVIDIA RTX platform includes more than 40 products for content creators, ranging from Max-Q thin and light notebooks equipped with GeForce RTX 2060, to workstations equipped with 4-way SLI Quadro RTX 8000 and servers equipped with 8-way RTX 8000.

Jensen Huang announced that the Rayvision (Fox Renderfarm) cloud rendering platform will be equipped with NVIDIA RTX GPUs, and the first batch of 5,000 RTX GPUs will be launched in 2020.

This is the largest cloud rendering platform in Asia. As of 2019, it has rendered three of China's most popular movies, "Wolf Warrior 2", "Nezha" (2019) and "The Wandering Earth", and more than 85% of Chinese film studios are Rayvision's customers.

Jensen Huang also released the Omniverse open 3D design collaboration platform for the architecture, engineering, and construction (AEC) industry. It adds real-time collaboration to AEC workflows both locally and in the cloud, and it will support mainstream AEC applications such as Autodesk Revit, Trimble SketchUp and McNeel Rhino.

A demo using Omniverse AEC was shown on the spot.
The China Resources Tower, designed by KPF Architects, was rendered in real time on a server equipped with 8-way RTX 8000.

HPC: CUDA Accelerated Genomic Analysis Toolkit for Genome Sequencing

NVIDIA's applications in HPC are also very rich. For example, NASA, which plans to send humans to Mars in 2030, runs hundreds of thousands of simulations of Mars landing scenarios on NVIDIA GPUs through the FUN3D fluid dynamics software, generating 150 TB of data.

For genome sequencing, Jensen Huang released NVIDIA Parabricks, a CUDA-accelerated genome analysis toolkit.

Parabricks can be used to discover mutations and produces results consistent with the industry-standard GATK best practices pipeline while achieving a 30-50x speedup. It also provides the DeepVariant tool, which detects genetic mutations using deep learning.

"I am pleased to announce that BGI has adopted Parabricks," Jensen Huang said. By using several GPU servers, BGI can process genomes at the rate at which its sequencers generate data.

According to him, NVIDIA added two new mainstream applications to CUDA this year, 5G vRAN and genomics, which are supported by industry leaders such as Ericsson and BGI.

In addition, Jensen Huang mentioned again the previously announced cooperation between NVIDIA and Arm. CUDA acceleration is now supported on Arm servers, and NVIDIA has announced NVIDIA HPC for Arm, its first Arm-based reference architecture, which can be configured with various Arm-based HPC servers.

TensorFlow now supports acceleration on Arm. With NVIDIA CUDA on Arm, TensorFlow can achieve world-class performance and scalability.

05

2019 is coming to an end, and at this conference NVIDIA not only showed off its AI, automotive, gaming and HPC capabilities but also revealed its circle of partners in many fields.

Jensen Huang said that with the end of Moore's Law, GPU-accelerated computing will become the future direction of development, which is now widely recognized.

NVIDIA can accelerate both single-threaded and parallel multi-threaded processing and optimizes across the entire software stack, allowing multi-GPU and multi-node systems to achieve incredible performance. NVIDIA has sold 1.5 billion GPUs, and every GPU is compatible with the same CUDA architecture.

As the biggest beneficiary of the AI deep learning boom so far, NVIDIA continues to enrich its AI and autonomous driving ecosystem based on high-performance software, hardware, and systems. Finding core scenarios to accelerate real-world deployment remains the current priority.
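The federated learning idea mentioned in the automotive section (each organization keeps its data local and uploads only processed results) can be illustrated with a small sketch. This is a generic federated averaging loop on a toy linear model, not NVIDIA's system; the function names, the model, and the learning rate are all assumptions made for illustration.

```python
def local_step(w, b, samples, lr=0.05):
    """One gradient step for y = w*x + b on a client's private samples.

    Only the updated parameters and the sample count leave the client;
    the raw (x, y) pairs are never returned.
    """
    n = len(samples)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
    return w - lr * grad_w, b - lr * grad_b, n


def federated_average(updates):
    """Server side: average client parameters, weighted by sample count."""
    total = sum(n for _, _, n in updates)
    w = sum(w_i * n for w_i, _, n in updates) / total
    b = sum(b_i * n for _, b_i, n in updates) / total
    return w, b


def train(clients, rounds=500):
    """Repeat: send the global model out, collect local updates, average."""
    w, b = 0.0, 0.0
    for _ in range(rounds):
        updates = [local_step(w, b, samples) for samples in clients]
        w, b = federated_average(updates)
    return w, b


if __name__ == "__main__":
    # Hypothetical private datasets, both drawn from y = 2x + 1.
    hospital = [(0.0, 1.0), (1.0, 3.0)]
    car_maker = [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]
    print(train([hospital, car_maker]))
```

The server in this sketch only ever sees parameters and counts, which is the privacy property the text describes. Real systems add secure aggregation, multiple local steps, and much larger models.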

Learn What is Arnold GPU

Arnold Render Farm

Arnold is one of the most popular renderers in animation and film production. As a leading cloud rendering services provider and GPU & CPU render farm, Fox Renderfarm is also an Arnold render farm. With the recent release of Arnold 5.3.0.0, the first version of Arnold GPU (beta) has been officially released. Here we share some results and thoughts from our early tests of Arnold GPU.

You may also want to know: How to Quickly Create an Object ID For Arnold in Maya.

The Principle of Arnold GPU

More than two years ago, Marcos Fajardo (the author of Arnold) talked about the possibility of an Arnold GPU renderer for the first time at SIGGRAPH 2015. At the time, the design was a GPU renderer with full platform support based on the OpenCL computing framework. However, the current Arnold GPU is based on the NVIDIA OptiX rendering architecture, with CUDA as the underlying language. CUDA is much friendlier to develop with than OpenCL, so development progresses faster, and most importantly, CUDA is faster and more stable. With the release of the RTX series of graphics cards, ray intersection processing is getting faster and faster, which makes the hardware better suited to GPU ray tracing algorithms.

Arnold CPU and GPU effects

The original goal of Arnold GPU rendering was to keep CPU and GPU rendering results close to each other. The following compares the results and speed of the two rendering modes in the beta version.

Test environment:

Katana 3.0
KtoA 2.3.0.0 gpu19
GeForce GTX 1080
Using 8 render threads

Parameter settings:

AA samples = 6
GI diffuse samples = 3
GI specular samples = 3
GI transmission samples = 3
GI diffuse depth = 2
GI specular depth = 3
GI transmission depth = 8
Light sampling is the default

Metal

Metal (CPU on the left, GPU on the right)

Rendering time:
CPU - 2m13s
GPU - 10s

In summary, the metal BRDF material does not require much computing power and is handled well on the GPU, so both the CPU and GPU results show little noise and GPU rendering is much faster.
When there are many hard surface materials in the scene, the GPU delivers much higher speed without losing rendering quality.

Glass

Glass (CPU on the left, GPU on the right)

Rendering time:
CPU - 31m57s
GPU - 17s

Glass BTDF materials are slow and inefficient to render with a ray tracing renderer. Although Arnold GPU renders extremely fast, rendering details are clearly lost and the noise is dense. Rendering slowly on the CPU is still more reliable.

Subsurface scattering

Subsurface scattering (CPU on the left, GPU on the right)

Rendering time:
CPU - 6m39s
GPU - 36s

Subsurface scattering (SSS), also known as BSSRDF, was the biggest surprise for Arnold GPU across these tests. Arnold GPU implements the random_walk BSSRDF in standard_surface.

First, Arnold 5 comes with two types of SSS:

Diffusion
Random_walk

Diffusion is an empirical SSS model that renders faster because many of its parameters are obtained from lookup tables. This empirical model is still commonly used today. Random_walk is a full light scattering calculation based on a real physical model.

Going back to Arnold GPU, it does not support diffusion but uses random_walk directly. The results are excellent: the noise is low, and the render is very close to the CPU result. In actual production, this fully meets film-quality CG requirements.

Cornell Box

Cornell_box (CPU on the left, GPU on the right)

Rendering time:
CPU - 23m37s
GPU - 1m27s

The result is excellent: although rendering is slow, the effect is impressive. Compared with Redshift, Arnold GPU loses badly on speed but wins clearly on quality. The biggest problem with Redshift is that indirect lighting tends to be too bright and dark details are insufficient. The Arnold GPU result is basically the same as the CPU result.
In this respect, it is the best among the renderers we know of (we cannot speak for prman).

Please note that the GPU render times above are significantly shorter partly because the sample settings are the same for both modes: at the same sample counts, the GPU render is noticeably noisier than the CPU render.

Arnold Beta Edition Limitations

Because Arnold GPU is still in early beta, many features are not available, and it is still a little early to use Arnold GPU in production. Here are some of the bigger drawbacks.

General restrictions

With the same sampling, GPU rendering produces more noise than CPU rendering
GPU rendering reads all textures into memory and video memory and does not support texture streaming
GPU rendering does not support bucket rendering; all supported AOVs are kept in memory
GPU rendering does not support OpenVDB

Shaders restrictions

OSL shaders are not supported yet
Third-party shaders are not supported at this time
Writing AOVs is not supported (no write_aov)

Lights restrictions

Cylinder_light is not supported yet
Disk_light is not supported at this time
Mesh_light is not supported yet
Light_links is not supported at this time
Light_filters is not supported at this time

The future of Arnold GPU

Arnold wants to use GPU rendering for film-level projects, but because of the GPU's own shortcomings, many renders are still computed more efficiently on the CPU. It is therefore very important to be able to switch freely between CPU and GPU rendering. On this basis, Arnold can provide high-quality CPU unidirectional ray tracing alongside efficient GPU OptiX ray tracing.

One set of APIs supports CPU and GPU

Arnold was originally designed to use a single set of APIs compatible with both CPU and GPU rendering.
Arnold GPU is now beginning to be compatible with some of the Maya native materials available in MtoA, so Arnold has at least made some progress here.

Support for OSL Shaders

According to the latest news from the developer forum, NVIDIA is working with Sony on GPU support for OSL, including several important OSL features: closures and LPEs. These are expected to come to Arnold GPU soon.

Rendering consistency

So far, Arnold does a good job of reproducing effects consistently. GPU rendering is trying to move closer to CPU rendering; because the two rendering architectures differ, we will not go into further detail here.

Fox Renderfarm hopes this will be of some help to you. Fox Renderfarm is a well-known cloud rendering services provider in the CG world, so if you need a render farm, why not try Fox Renderfarm, which offers a free $25 trial for new users? Thanks for reading!

Reference: MIYAZAKI
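To put the reported timings side by side, the short Python sketch below converts the render times quoted in the tests above into CPU-to-GPU speedup ratios. The timings come from this article; the helper names are illustrative, and the ratios say nothing about noise, which the article notes is higher on the GPU at equal sample counts.

```python
import re

# Render times reported in the tests above, as (CPU, GPU) strings.
RENDER_TIMES = {
    "Metal": ("2m13s", "10s"),
    "Glass": ("31m57s", "17s"),
    "Subsurface scattering": ("6m39s", "36s"),
    "Cornell box": ("23m37s", "1m27s"),
}


def to_seconds(text):
    """Convert a string such as '2m13s' or '10s' to whole seconds."""
    match = re.fullmatch(r"(?:(\d+)m)?(\d+)s", text)
    if match is None:
        raise ValueError(f"unrecognized time format: {text!r}")
    minutes = int(match.group(1) or 0)
    seconds = int(match.group(2))
    return minutes * 60 + seconds


def speedups(times):
    """Return {scene: cpu_seconds / gpu_seconds} for each test scene."""
    return {
        scene: to_seconds(cpu) / to_seconds(gpu)
        for scene, (cpu, gpu) in times.items()
    }


if __name__ == "__main__":
    for scene, ratio in speedups(RENDER_TIMES).items():
        print(f"{scene}: GPU finished about {ratio:.1f}x faster")
```

Under these numbers the glass test shows by far the largest ratio, which is also the test where the article reports the most visible loss of detail, so the ratios are best read together with the quality notes.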

What Is the Difference Between GPU Mining and GPU Render Farm?

GPU Rendering

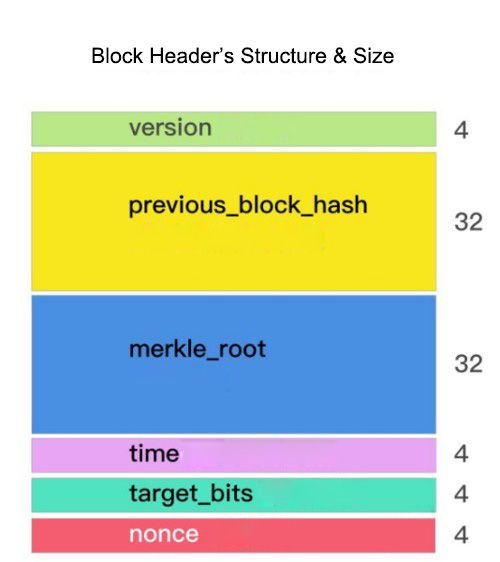

Early mining used the CPU to calculate hash values. As the number of blocks increased, the difficulty of calculating the hash value rose to the point where the electricity cost of the operation exceeded the return, and CPU mining lost its practical value.

GPU Mining

GPU mining uses the GPU to calculate the hash value. It is faster than CPU mining because:

The CPU also carries the computing burden of the system and all programs running on it;
The CPU can execute 4 32-bit operations per clock cycle (using 128-bit SSE instructions), while a GPU such as the Radeon HD 5970 can perform 3200 32-bit operations (3200 ALU instructions). That is about 800 times as many operations per clock cycle. Although CPUs can increase their core counts to 6, 8, 12, and so on, the GPU is still much faster.

GPU mining calculates the hash value of a new block, writes the transaction data, generates the block and links it to the blockchain, and earns virtual currency such as Bitcoin or Ethereum in return.

GPU rendering uses the GPU's computing units for graphics processing, such as calculating the lighting and material of each pixel on the camera's projection plane, shading the pixel, and writing the resulting image to a file or showing it on a display device.

A GPU render farm is a network of computing nodes with many GPU devices installed, usually dedicated to GPU rendering to produce image files. One example is Fox Renderfarm, a CPU & GPU cloud rendering service provider.

Of course, a GPU render farm can be repurposed for GPU mining.
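The hash search that mining performs can be sketched in a few lines of Python. This is a deliberately simplified illustration, assuming a single SHA-256 pass and a "leading hex zeros" difficulty rule; it is not the actual Bitcoin or Ethereum block format. The function name and constants are hypothetical, and the last lines simply restate the per-clock arithmetic quoted above.

```python
import hashlib


def mine(block_data, difficulty, max_nonce=10_000_000):
    """Search for a nonce whose SHA-256 digest starts with `difficulty` hex zeros.

    Returns (nonce, hex_digest), or None if no nonce below max_nonce works.
    Each attempt is independent of the others, which is why the search
    parallelizes so well across thousands of GPU cores.
    """
    prefix = "0" * difficulty
    for nonce in range(max_nonce):
        digest = hashlib.sha256(block_data + str(nonce).encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
    return None


# Per-clock figures quoted in the text above.
CPU_OPS_PER_CLOCK = 4      # 128-bit SSE: four 32-bit operations
GPU_OPS_PER_CLOCK = 3200   # Radeon HD 5970: 3200 ALU operations
OPS_RATIO = GPU_OPS_PER_CLOCK // CPU_OPS_PER_CLOCK

if __name__ == "__main__":
    print(mine(b"example block", difficulty=3))
    print(f"GPU/CPU operations per clock: {OPS_RATIO}x")
```

Raising `difficulty` by one multiplies the expected number of attempts by 16, which mirrors how rising difficulty made CPU mining uneconomical.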

What is a GPU render farm

GPU Render Farm

What is a GPU render farm? Simply put, a GPU render farm is a render farm that supports GPU rendering. At the NVIDIA conference on Wednesday, December 18, 2019, Fox Renderfarm announced that it would launch cloud rendering services powered by NVIDIA RTX the following year. RTX is a breakthrough in the price/performance ratio of cloud rendering on Fox Renderfarm: 12 times faster and 7 times lower in cost. After all, as of 2019, the top three movies in Chinese film history were all rendered by Fox Renderfarm.

Under the same conditions, CPU rendering is much slower than GPU rendering. Fox Renderfarm is the largest render farm in Asia that supports both CPU and GPU rendering. Summing up multiple rendering projects, scenes that originally took 485 hours to render can now be rendered on the GPU in less than 40 hours. The GPU increases rendering speed while saving customers money.

GPU rendering in film production and animation is constantly maturing. In the production of "Deadpool", the well-known Blur Studio used GPU rendering to create a preview short that was very close to the final product. Originally, "Deadpool" was not favored by film investors, but after the short came out, it was enthusiastically received online. This led the producers to finally decide to invest in and shoot the film.

"We want the best preview in front of Fox Film," Margo said.

Blur Studio used Autodesk 3ds Max to produce the CG assets from scratch and then rendered with Chaos Group's GPU rendering plug-in V-Ray RT, running only on NVIDIA GPUs, which was 15 times faster than using a CPU renderer.

This is just one small example of GPU rendering, but it shows that GPU rendering has great potential in the commercial rendering market.

So what are the advantages of GPU rendering? How do GPUs differ from CPUs?

Why is there a GPU?
When we talk about GPU rendering, this question cannot be avoided. The term GPU (Graphics Processing Unit) was first introduced by NVIDIA when it released the GeForce 256 graphics processing chip in 1999. Its core technologies included hardware T&L, cube environment mapping, vertex blending, texture compression, bump mapping, a dual-texture four-pixel 256-bit rendering engine, and more. The GPU was born, and has kept evolving since, to meet increasingly complex graphics computing needs and deliver a more realistic and vivid real-time experience.

CPU on left, GPU on right

Today the GPU performs large numbers of coordinate and light source transformations through hardware T&L. The CPU no longer needs to calculate a large amount of lighting data, and better performance can be obtained directly from the graphics card. At the same time, 3D models can be described with more polygons for finer detail.

NVIDIA GPUs helped Tim Miller of Blur Studio create realistic images.

GPU rendering therefore has an advantage in hardware support, and together with the continuing maturation and optimization of software support, this gives GPU-based render farm solutions very broad market prospects.
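The "T&L" (transform and lighting) work mentioned above is, at its core, a small amount of per-vertex math repeated for every vertex in a scene. The following is a minimal CPU-side Python sketch of that math, assuming a 4x4 row-major transform matrix and simple Lambert diffuse lighting; the function names are illustrative and this is not how any particular GPU or driver implements it.

```python
import math


def transform(matrix, vertex):
    """Apply a 4x4 row-major matrix to a 3D point (w = 1), return a 3D point."""
    x, y, z = vertex
    v = (x, y, z, 1.0)
    out = [sum(matrix[r][c] * v[c] for c in range(4)) for r in range(4)]
    w = out[3]
    return (out[0] / w, out[1] / w, out[2] / w)


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert(normal, light_dir):
    """Diffuse intensity: clamped cosine between the normal and the light direction."""
    n = normalize(normal)
    l = normalize(light_dir)
    return max(0.0, sum(a * b for a, b in zip(n, l)))


if __name__ == "__main__":
    # Translate by (1, 2, 3); the last row keeps w = 1.
    translate = [
        [1.0, 0.0, 0.0, 1.0],
        [0.0, 1.0, 0.0, 2.0],
        [0.0, 0.0, 1.0, 3.0],
        [0.0, 0.0, 0.0, 1.0],
    ]
    print(transform(translate, (0.0, 0.0, 0.0)))
    print(lambert((0.0, 0.0, 1.0), (1.0, 0.0, 1.0)))
```

Because every vertex is processed independently with the same few multiplications and additions, this workload maps naturally onto dedicated parallel hardware, which is the motivation the section gives for moving it off the CPU.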

What is GPU rendering?

GPU Render Farm

What is GPU rendering?

Before you can understand GPU rendering, you need to understand rendering itself.

Rendering is the last step in CG production. It is the process by which software generates images from a model. The program calculates the geometry, vertices, and other information of the graphics to be drawn, and then produces the image.

When performing rendering tasks, the computer's hardware falls into two major types: CPU and GPU. The CPU, or central processing unit, consists of a few cores optimized for serial tasks.

The GPU, or graphics processing unit, accelerates graphics rendering and fill operations. It is a massively parallel architecture consisting of thousands of smaller, more efficient cores designed to handle many tasks simultaneously. This speeds up rendering, and GPUs are now also designed to handle a wide range of computing applications.

What are the advantages of GPU rendering?

In graphics rendering, GPUs offer faster speed and lower cost than CPUs, thanks to an architecture and computing power designed for graphics acceleration. The usability of GPU-accelerated rendering is also improving, and GPU rendering is welcomed by more and more users.

Currently available GPU renderers:

Redshift

Redshift is a GPU-accelerated, biased renderer that uses approximation and interpolation techniques to achieve noise-free results with relatively few samples, with much faster output than unbiased renderers at equivalent quality. In terms of rendering quality, Redshift has reached the highest level of GPU rendering and can render high-quality images.

Blender Cycles

Blender Cycles is an unbiased render engine that uses ray tracing algorithms to provide hyper-realistic rendering.
The advantage of the ray tracing algorithm is that the settings are simple, the results are accurate, and the rendering time can be greatly reduced. Cycles can be used as part of Blender or as a standalone renderer.

NVIDIA Iray

NVIDIA Iray is a highly interactive and intuitive physically based rendering technology that produces photorealistic images of complex scenes by simulating real-world lighting and materials.

Octane Render

Octane Render is a GPU-accelerated, physically based, unbiased renderer. This means you can get super-fast photorealistic rendering results using only the graphics card in your computer.

V-Ray RT

V-Ray RT is Chaos Group's interactive rendering engine. It can use both CPU and GPU hardware acceleration, and it tracks changes to objects, lights, materials, and so on in real time, automatically updating a shaded preview of the scene.

Indigo Renderer

Indigo Renderer is a physically based global illumination renderer that simulates the physical behavior of light to achieve near-perfect realistic images. With an advanced physical camera model, a highly realistic material system and Metropolis Light Transport for complex lighting environments, Indigo Renderer meets the high standards of fidelity required in architectural and product visualization.

LuxRender

LuxRender is a physically based, unbiased renderer. Based on state-of-the-art algorithms, LuxRender simulates the flow of light according to physical equations, producing images of photographic quality.

As a powerful and affordable GPU render farm in the CG industry, Fox Renderfarm supports most mainstream renderers and offers both CPU and GPU rendering services. With a massive number of rendering nodes and no queuing, it accelerates your rendering and helps keep your project on schedule. You are welcome to get a $25 free trial.

Do Render Farms Use CPU or GPU?

GPU Render Farm

Within the realm of computer graphics, artists and studios often find themselves contemplating an essential question: Do render farms utilize CPU rendering, GPU rendering, or both? As render farms offer significant computational power to handle complex projects, understanding the distinction between these two rendering methods is crucial for deciding how to approach your next cloud rendering job.

What are CPUs and GPUs?

CPUs (Central Processing Units) are often referred to as the "brains" of a computer as they are the most important processors. They are designed to handle general-purpose computing tasks and execute instructions from software applications. A CPU typically consists of a few cores that can manage multiple tasks sequentially, which makes it highly versatile but often slower at cloud rendering tasks that require parallel processing capabilities.

GPUs (Graphics Processing Units) are designed to accelerate graphics rendering. With a large number of smaller cores, GPUs can process multiple tasks simultaneously, making them ideal for parallel processing. This capability allows GPUs to handle complex graphical tasks such as 3D rendering, visual effects, and simulations much faster than CPUs. They have become the go-to choice for tasks that require substantial graphical computation and speedy results.

The Difference Between CPU and GPU Rendering

When deciding between CPU and GPU rendering, it's essential to understand the fundamental differences between the two methods.

Definition: CPU rendering refers to using the computer's central processing unit to perform rendering tasks, while GPU rendering utilizes the graphics processing unit. Each method involves distinct approaches to computing.

Rendering Speed: Generally, GPU rendering is faster than CPU rendering due to its ability to handle multiple tasks simultaneously.
This accelerated processing leads to shorter render times, especially for high-resolution images and complex scenes.

Cost: Render farms that use CPUs may be more cost-effective for basic rendering tasks, but for projects that demand quicker turnaround times, GPU rendering may justify the investment due to its efficiency.

Efficiency: GPU rendering often provides greater efficiency for tasks that can be processed in parallel, allowing artists to produce high-quality renders in less time. However, CPU rendering may still be necessary for specific applications or projects that rely on intricate calculations and require more precision.

Factors to Consider When Choosing CPU or GPU Render Farm

When selecting a render farm for your project, it's crucial to consider the factors that determine whether to use CPU or GPU rendering (render farms can also employ both methods). Some factors that can influence your decision include:

Project Complexity and Urgency: If your project involves intricate visuals or requires rapid completion, GPU rendering is likely the better choice. However, if you are working on a less complex project with more time flexibility, CPU rendering can be sufficient.

Rendering Engine: The rendering engine you choose significantly impacts your decision. Some engines are optimized for CPU rendering, while others are designed to leverage GPU capabilities. It's essential to align your selected engine with the rendering method that best suits your project's requirements.

Budget: While GPU rendering can finish jobs faster than CPU rendering, it might come with higher operational costs. Balancing your project's demands with your budget is vital for selecting the right render farm.

Best CPU and GPU Render Farm

When it comes to selecting a reliable render farm that supports both CPU and GPU rendering, Fox Renderfarm stands out in the industry.
Recognized for its high-performance capabilities, Fox Renderfarm offers a robust platform that employs a mix of both CPU and GPU rendering, ensuring users have both options available for their specific project requirements. With scalable resources and flexible pricing plans, it caters to both high-end and budget-conscious projects alike. At Fox Renderfarm, GPU pricing starts from $0.9 per node per hour while CPU pricing starts even lower at a rate of $0.0306 per core per hour.

Additionally, Fox Renderfarm supports most of the popular 3D software today, including 3ds Max, Maya, Cinema 4D, Blender, Unreal Engine, and many more. It also provides an intuitive interface and excellent 24/7 customer support, allowing users to easily navigate the cloud rendering process and maximize their workflow.

Conclusion

The decision between CPU and GPU rendering ultimately depends on various factors, including project complexity, urgency, chosen rendering engine, and budget. Render farms can effectively utilize both CPU and GPU technologies, providing valuable options for artists. By understanding the strengths and weaknesses of each method, you can make informed choices to enhance your rendering workflow. If you're searching for a trusted render farm, consider trying out Fox Renderfarm for an exceptional cloud rendering experience that caters to all your needs, along with a complimentary $25 trial for new users!
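As a rough illustration of how the starting rates quoted above translate into a job budget, here is a small Python sketch. It assumes simple linear billing at the starting rates with no discounts, minimums, or storage fees. The job size (1800 frames), the per-frame render times, and the 16-core node are hypothetical inputs chosen only for the example.

```python
# Starting rates quoted in the article (USD).
GPU_RATE_PER_NODE_HOUR = 0.9
CPU_RATE_PER_CORE_HOUR = 0.0306


def gpu_job_cost(frames, minutes_per_frame, rate=GPU_RATE_PER_NODE_HOUR):
    """Estimated cost when each frame occupies one GPU node for the given time."""
    node_hours = frames * minutes_per_frame / 60
    return node_hours * rate


def cpu_job_cost(frames, minutes_per_frame, cores_per_node,
                 rate=CPU_RATE_PER_CORE_HOUR):
    """Estimated cost when each frame occupies every core of one CPU node."""
    core_hours = frames * minutes_per_frame / 60 * cores_per_node
    return core_hours * rate


if __name__ == "__main__":
    # Hypothetical job: 1800 frames, 10 min/frame on a 16-core CPU node
    # versus 1 min/frame on a GPU node.
    print(f"CPU estimate: ${cpu_job_cost(1800, 10, 16):.2f}")
    print(f"GPU estimate: ${gpu_job_cost(1800, 1):.2f}")
```

The comparison depends entirely on how much faster the GPU actually renders a given scene, so it is worth measuring a few test frames on both before committing a full job.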
